How to Set Up an AWS EKS Cluster with Worker Nodes and a Management Server

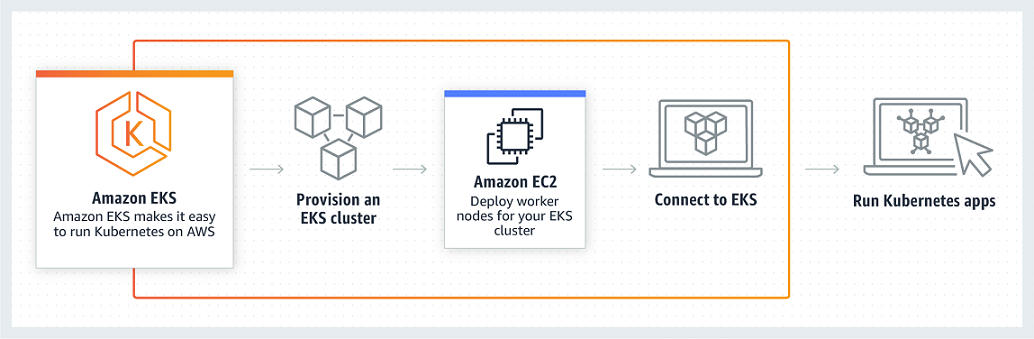

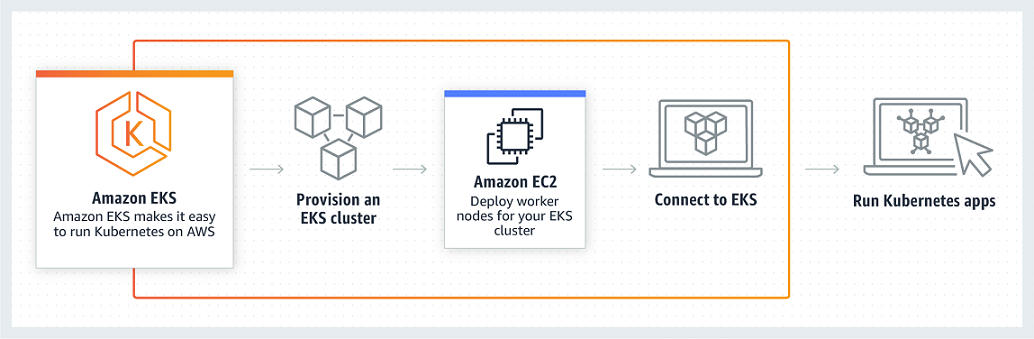

AWS EKS: Amazon Elastic Container Service for Kubernetes (EKS) is a managed service that lets us run a Kubernetes cluster without needing to stand up or maintain our own Kubernetes control plane.

Kubernetes is an open-source system for automating the deployment, scaling and management of containerized applications.

Amazon EKS can be integrated with many AWS services, including:

- ELB for Load Balancing

- IAM for authentication

- Amazon VPC for isolation

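Note: It's recommended to use the same IAM user both to provision the EKS cluster and to connect to it with kubectl from the management server, because the IAM identity that creates the cluster is initially the only one granted administrator access inside it.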

Step 1: Set Up a New User and IAM Role:

Before provisioning the EKS cluster, we need to set up a user (programmatic access, not console access) and an IAM role that EKS uses to provision the cluster and its required resources on your behalf. Follow the steps below to create the user and the IAM role.

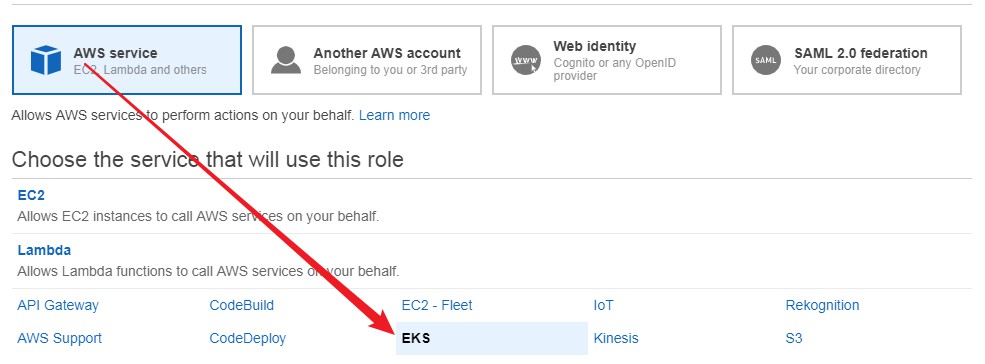

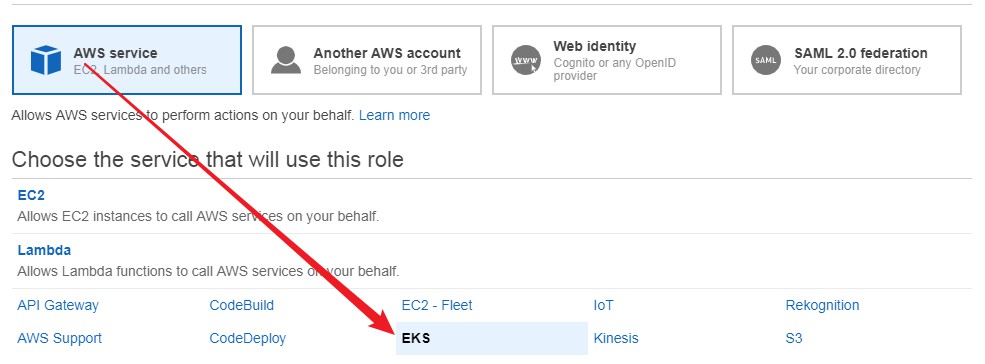

A- Create the Amazon EKS Service Role:

- Log on to the AWS Console

- Go to IAM

- Select Roles and choose Create role

- Select AWS service as the type of trusted entity

- Choose EKS

- Next: Permissions

- Next again

- Add tags (optional)

- Next

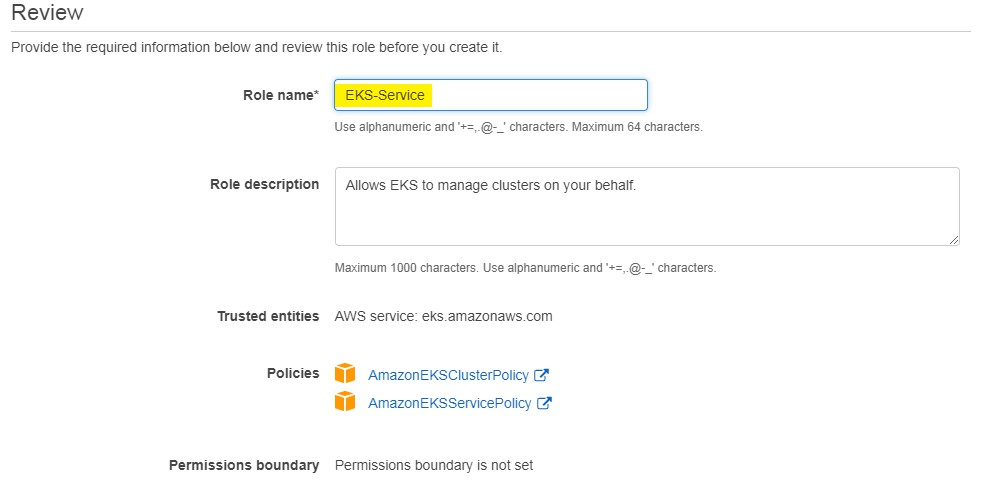

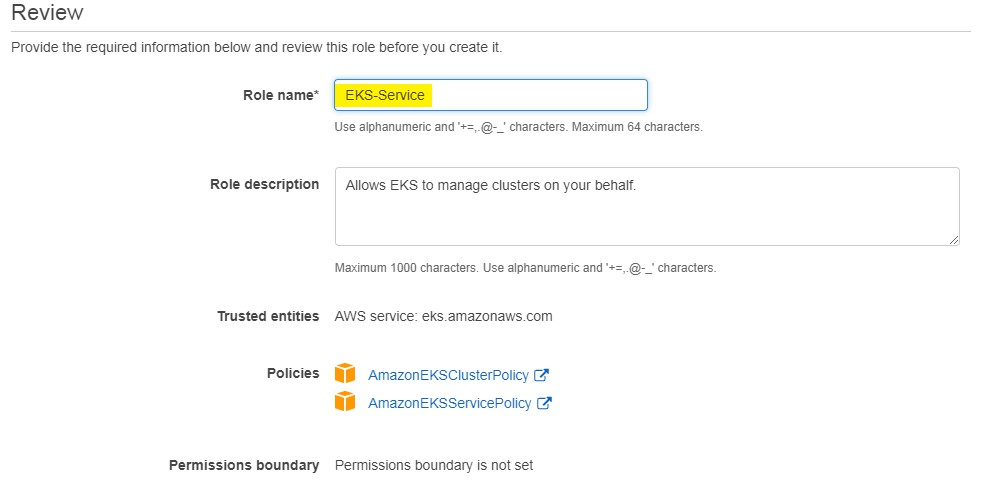

- Enter the role name EKS-Service

- Choose Create role to finish creating the role.
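Choosing EKS as the trusted service gives the role a trust policy that allows the EKS service to assume it. For reference, the sketch below builds that trust document with only the standard library, assuming the standard `eks.amazonaws.com` service principal; in the console flow above you do not need to paste it yourself.

```python
import json


def eks_service_trust_policy() -> dict:
    """Trust policy for the EKS service role ("EKS-Service" in this guide).

    It lets the EKS control plane (service principal eks.amazonaws.com)
    assume the role through STS.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "eks.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Print the document in the same JSON form the IAM console displays.
    print(json.dumps(eks_service_trust_policy(), indent=2))
```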

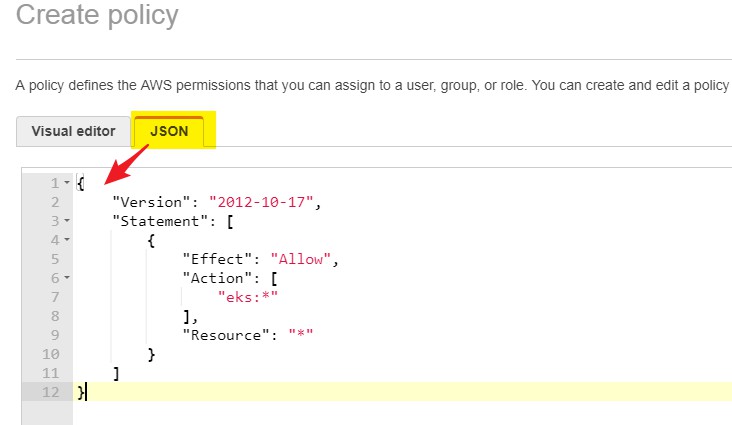

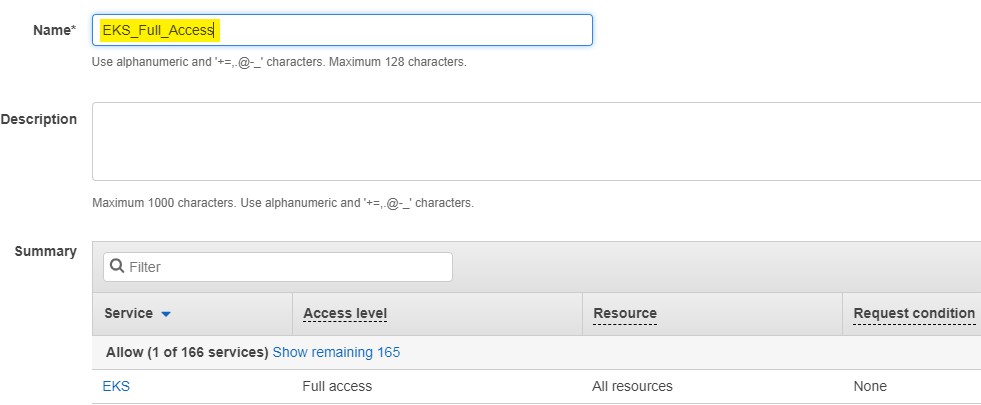

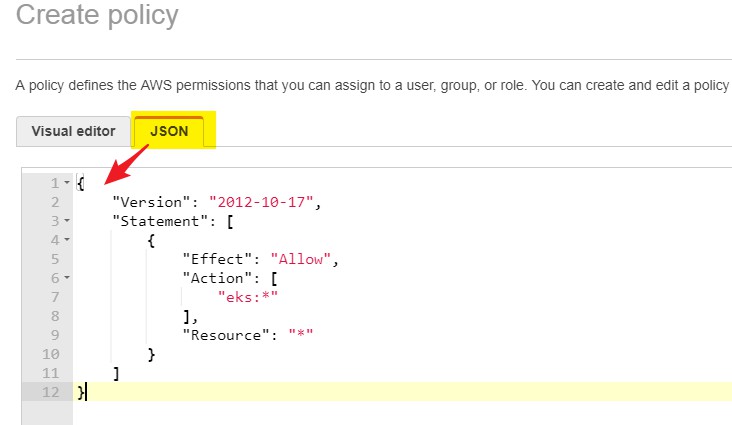

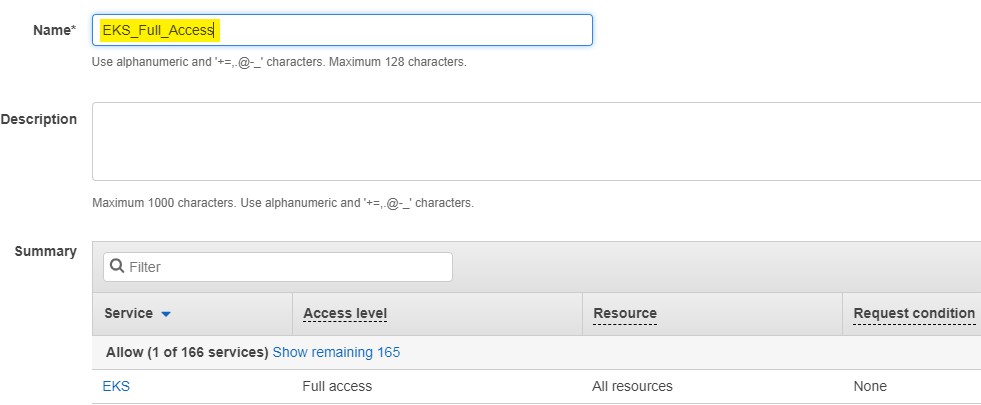

B- Create a Policy to Grant EKS Full Access:

- Log on to AWS Console

- Go to IAM

- Choose Policies

- Choose Create policy

- Choose the JSON tab and paste the policy document

- Click Review policy

- Enter the policy name EKS_Full_Access

- Click Create policy

The EKS_Full_Access policy is now created.
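The JSON pasted in this step is not reproduced here. A minimal policy that grants access to every EKS API action might look like the one below; the statement is an assumption, not necessarily the exact policy the author used. The sketch serializes it so it can be pasted into the IAM JSON editor.

```python
import json

# Hypothetical content for EKS_Full_Access: allow every EKS API action
# ("eks:*") on all resources. This is an assumption; the original policy
# text is not shown in this guide.
EKS_FULL_ACCESS = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["eks:*"],
            "Resource": "*",
        }
    ],
}


def policy_json(policy: dict) -> str:
    """Serialize a policy so it can be pasted into the IAM JSON editor."""
    return json.dumps(policy, indent=4)


if __name__ == "__main__":
    print(policy_json(EKS_FULL_ACCESS))
```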

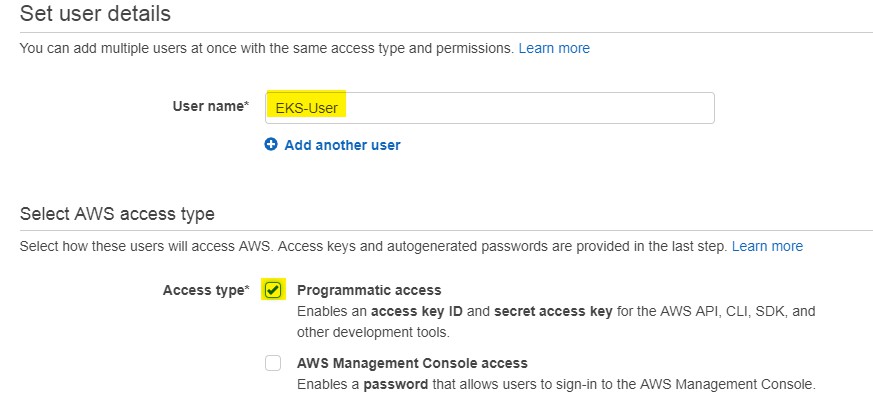

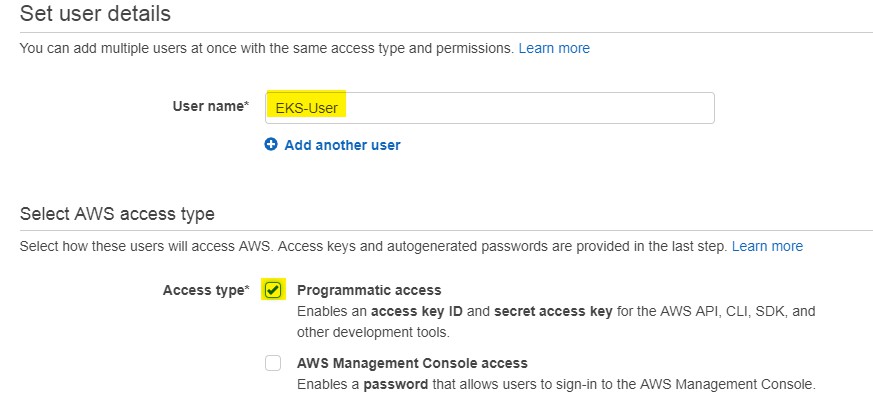

C- Create a User:

- Log on to the AWS Console

- Go to IAM

- Select Users and choose Add user

- On the next page, enter the user name

- Choose the access type Programmatic access

- Select Next: Permissions

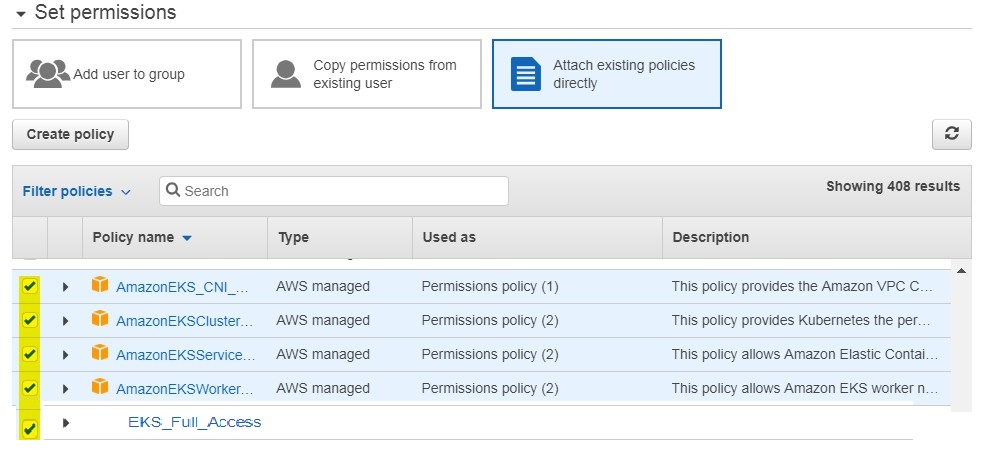

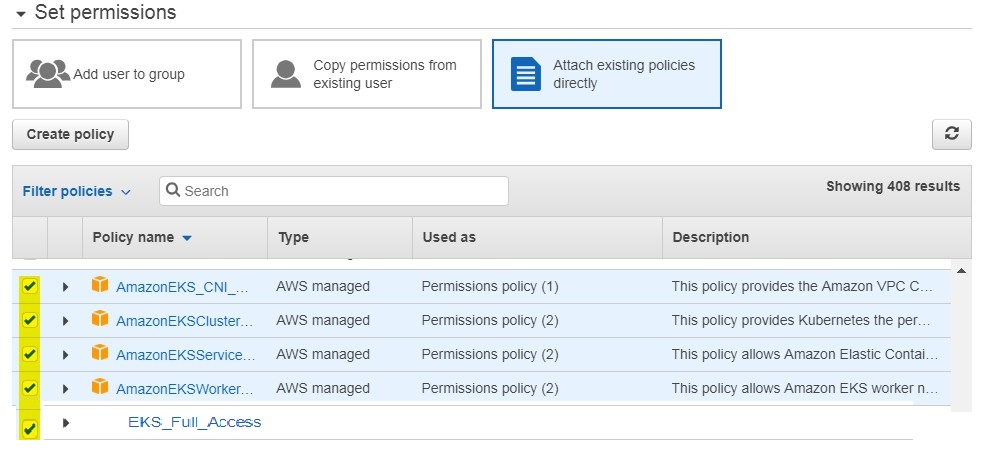

- Choose Attach existing policies directly

- Select the policy shown in the screenshot, and AdministratorAccess as well

- Next

- Add tags (optional)

- Next

- Review the final selection and click Create user to finish.

The user is now set up. We will use this user to create the EKS cluster from the AWS CLI and to manage it.

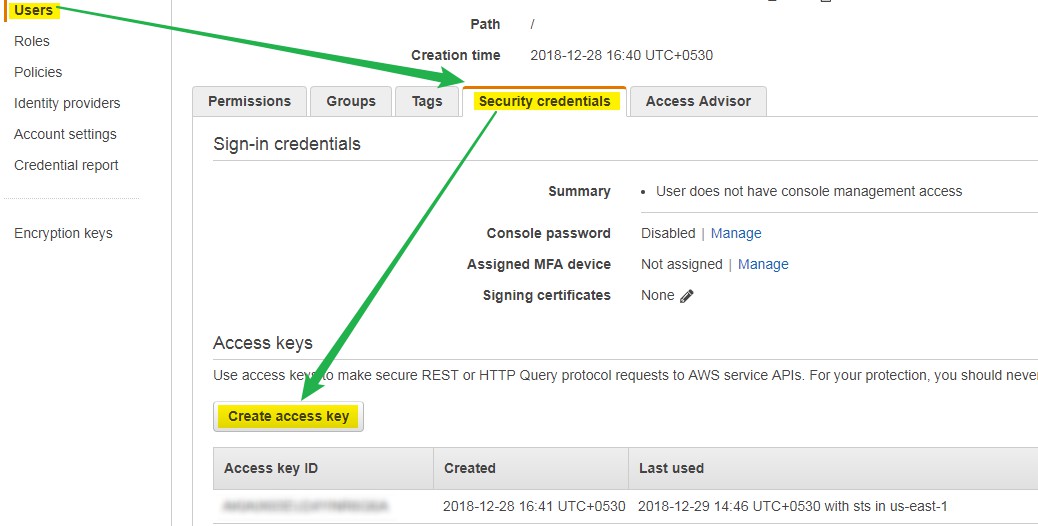

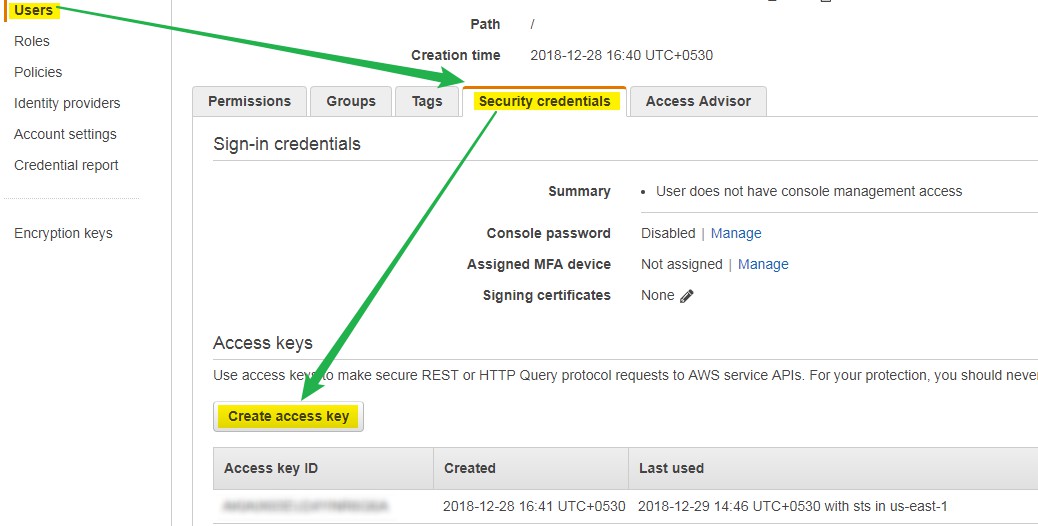

D- Generate Access and Secret Keys:

To create and manage the EKS cluster, we need to set up the AWS CLI so that aws-iam-authenticator can communicate with our cluster using the AWS CLI credentials profile.

- Log on to AWS Console

- Go to IAM

- Select EKS-User

- Select the Security credentials tab

- Click on Create access key

Download the access and secret keys and keep them somewhere safe. We will use them in the AWS CLI configuration in a later step.

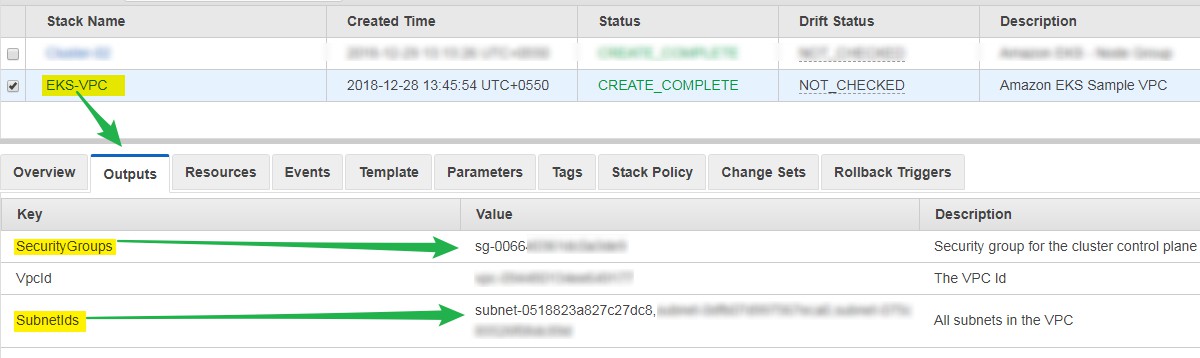

Step 2: Create the Amazon EKS Cluster VPC:

We need a VPC to provision the EKS cluster and nodes. You can use an existing VPC, but it's best practice to create a new VPC with its own subnets and security groups. Follow the steps below to create a new EKS cluster VPC.

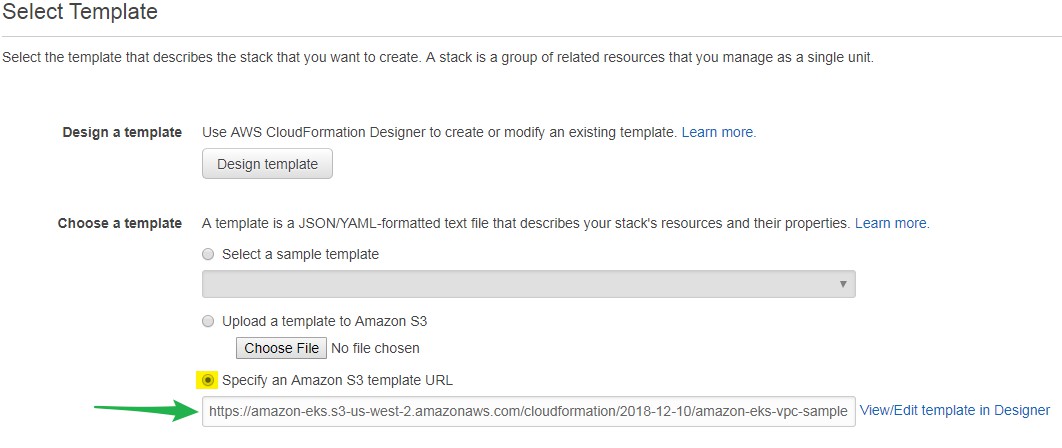

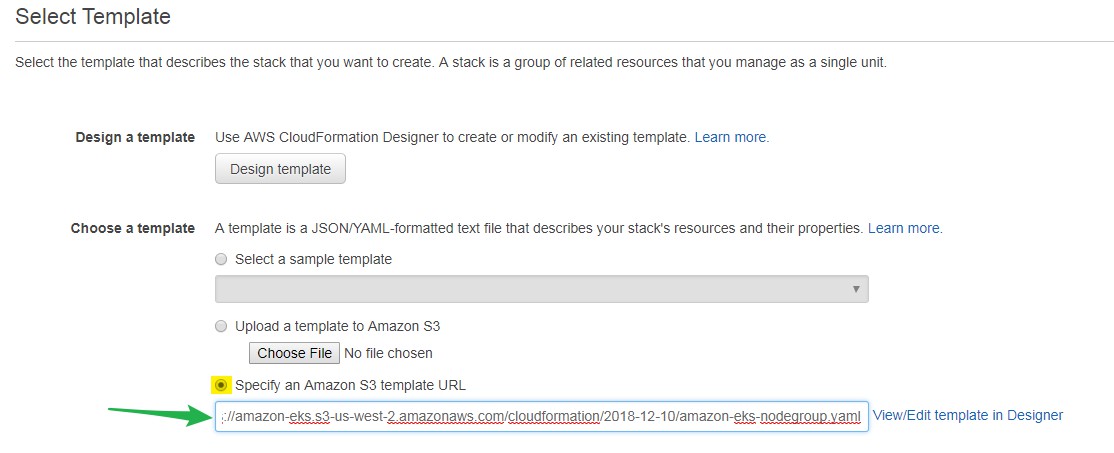

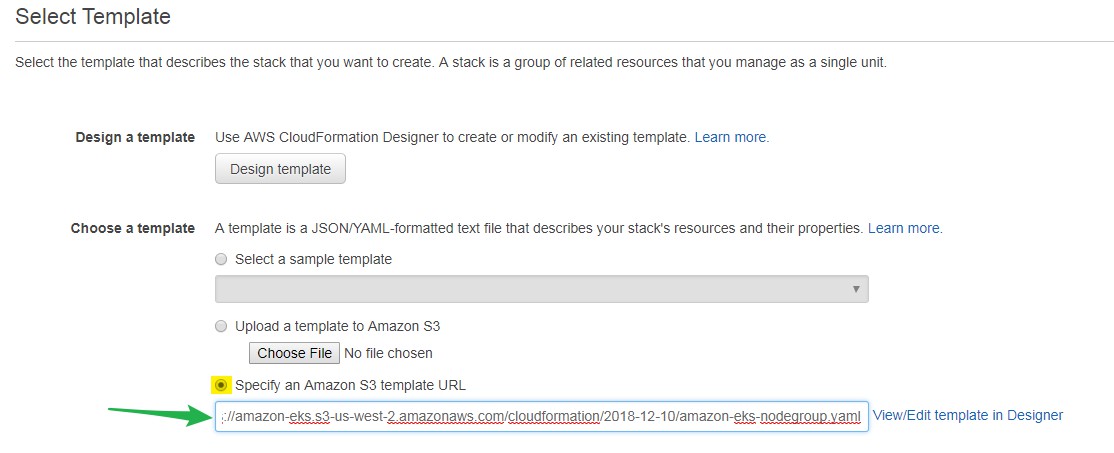

- Log on to the AWS Console: https://console.aws.amazon.com/cloudformation/

- Go to CloudFormation

- Select Create stack

- Choose Specify an Amazon S3 template URL

- Add the following URL: https://amazon-eks.s3-us-west-2.amazonaws.com/cloudformation/2018-12-10/amazon-eks-vpc-sample.yaml

- Click Next

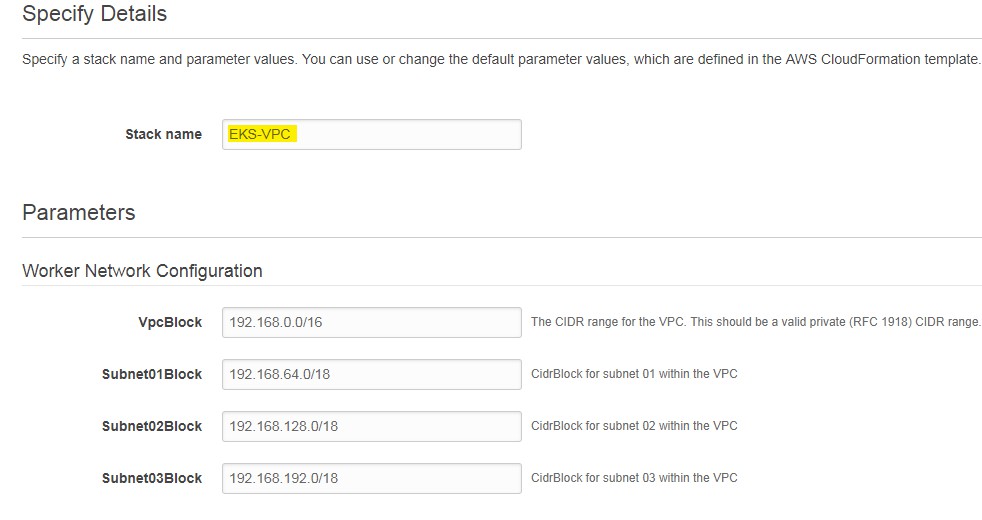

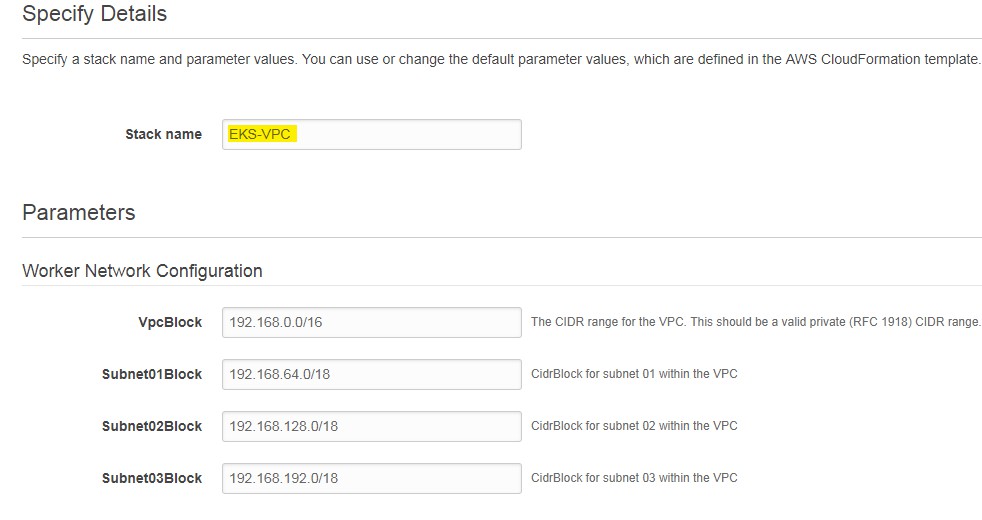

- Enter the stack name, VPCBlock, Subnet01Block, Subnet02Block and Subnet03Block.

- Click Next

- Optionally, specify tags, rollback triggers and CloudWatch monitoring, or click Next to skip this section.

- Review the configuration

- Click Create to finish and create the EKS cluster VPC
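Before clicking Create, it can help to confirm that the three subnet blocks fit inside the VPC block and do not overlap each other, since subnet creation fails otherwise and the stack rolls back. A minimal sketch with Python's `ipaddress` module follows; the CIDR values are example assumptions, not values required by the template.

```python
import ipaddress

# Example values (assumptions): a /16 VPC split into three /18 subnets.
VPC_BLOCK = "192.168.0.0/16"
SUBNET_BLOCKS = ["192.168.64.0/18", "192.168.128.0/18", "192.168.192.0/18"]


def check_blocks(vpc_block: str, subnet_blocks: list) -> list:
    """Return a list of problems; an empty list means the layout is consistent."""
    vpc = ipaddress.ip_network(vpc_block)
    subnets = [ipaddress.ip_network(b) for b in subnet_blocks]
    problems = []
    # Every subnet must fall inside the VPC CIDR block.
    for s in subnets:
        if not s.subnet_of(vpc):
            problems.append(f"{s} is outside {vpc}")
    # No two subnets may overlap.
    for i, a in enumerate(subnets):
        for b in subnets[i + 1:]:
            if a.overlaps(b):
                problems.append(f"{a} overlaps {b}")
    return problems


if __name__ == "__main__":
    print(check_blocks(VPC_BLOCK, SUBNET_BLOCKS) or "CIDR layout looks consistent")
```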

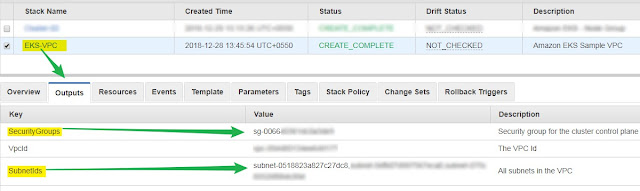

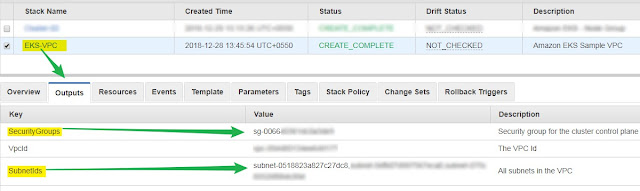

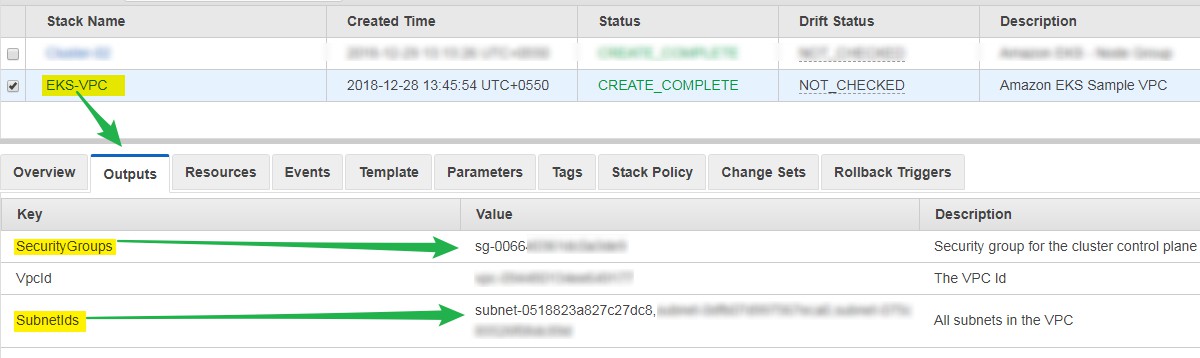

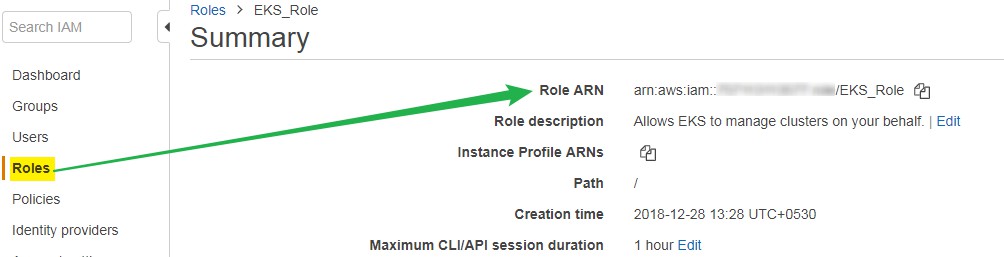

It may take 5 to 10 minutes for the stack to complete and the VPC to become available. Note down the subnet IDs and the security group ID; we will use them with the AWS CLI to create the EKS cluster.

For example, see the screenshot above.
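Besides the console, the same stack outputs are returned by `aws cloudformation describe-stacks --stack-name <stack-name>`. The sketch below pulls the subnet and security group IDs out of that JSON. The output keys `SubnetIds`, `SecurityGroups` and `VpcId` are assumed to match the sample VPC template, and the IDs in the sample data are made up.

```python
import json

# Made-up sample in the shape returned by
# `aws cloudformation describe-stacks --stack-name <stack-name>`.
SAMPLE = """
{"Stacks": [{"StackName": "eks-vpc",
  "Outputs": [
    {"OutputKey": "SubnetIds", "OutputValue": "subnet-aaa,subnet-bbb,subnet-ccc"},
    {"OutputKey": "SecurityGroups", "OutputValue": "sg-111"},
    {"OutputKey": "VpcId", "OutputValue": "vpc-222"}]}]}
"""


def stack_outputs(describe_json: str) -> dict:
    """Map OutputKey -> OutputValue for the first stack in the response."""
    stack = json.loads(describe_json)["Stacks"][0]
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


if __name__ == "__main__":
    outputs = stack_outputs(SAMPLE)
    # Output key names are assumed from the sample VPC template.
    print("Subnets:", outputs["SubnetIds"].split(","))
    print("Security group:", outputs["SecurityGroups"])
```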

Step 3: Set Up the Management System:

We need a system to create and manage the EKS cluster. Install the following on it:

- AWS CLI

- aws-iam-authenticator

- kubectl

- Access and secret keys

A- Install AWS CLI:

- To install the AWS CLI, see: https://docs.aws.amazon.com/cli/latest/userguide/cli-chap-install.html

In my case, I am using Ubuntu 18.04. Follow the commands below to finish the installation.

- Check the Python version

If you don't have Python installed, install it first.

- Install python-pip

- Install awscli

- Make the aws command available

- Verify the installation.

B- Set up aws-iam-authenticator:

- Download aws-iam-authenticator

- Make aws-iam-authenticator executable

- Set the path for aws-iam-authenticator

- Verify the aws-iam-authenticator installation

C- Set up kubectl:

Kubectl is an important tool for managing an Amazon EKS cluster. Let's set up kubectl.

- Download kubectl

- Make kubectl executable

- Set the kubectl path

- Verify the kubectl installation

All three tools have been set up successfully.
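The install commands themselves are not reproduced here. As a quick check that the three tools from this step ended up on the PATH, a small Python sketch like the following could be used. The verification subcommands (`aws --version`, `aws-iam-authenticator help`, `kubectl version --client`) are common choices, assumed rather than taken from the original.

```python
import shutil
import subprocess

# Tools the management system needs, each with a local-only command used
# to confirm the binary actually runs (assumed verification commands).
TOOLS = {
    "aws": ["aws", "--version"],
    "aws-iam-authenticator": ["aws-iam-authenticator", "help"],
    "kubectl": ["kubectl", "version", "--client"],
}


def missing_tools(tools=TOOLS) -> list:
    """Return the names of tools that are not found on PATH."""
    return [name for name in tools if shutil.which(name) is None]


def show_versions(tools=TOOLS) -> None:
    """Run each installed tool's check command and print the first output line."""
    for name, cmd in tools.items():
        if shutil.which(name) is None:
            continue
        result = subprocess.run(cmd, capture_output=True, text=True)
        # Some versions of `aws --version` print to stderr, so fall back to it.
        text = (result.stdout or result.stderr).strip()
        print(f"{name}: {text.splitlines()[0] if text else '(no output)'}")


if __name__ == "__main__":
    missing = missing_tools()
    print("Missing:", ", ".join(missing) if missing else "none")
    show_versions()
```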

D- Set up AWS CLI access and secret key:

We need to give the AWS CLI access to our AWS account in order to provision the Amazon EKS cluster and manage its resources.

- Run the following command on your system.
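The command itself is not reproduced here. For reference, the AWS CLI stores the access and secret key in `~/.aws/credentials` and the default region and output format in `~/.aws/config`, both in INI format. The sketch below renders the two files with `configparser` so their shape is visible; the key values and the `us-west-2` region are placeholders, and nothing is written to disk.

```python
import configparser
import io

# Placeholder values: substitute the keys downloaded for EKS-User.
ACCESS_KEY = "AKIAEXAMPLEKEY"
SECRET_KEY = "example-secret-key"
REGION = "us-west-2"  # assumption: use the region where you create the cluster


def render_ini(sections: dict) -> str:
    """Render {section: {key: value}} as INI text."""
    parser = configparser.ConfigParser()
    parser.read_dict(sections)
    buf = io.StringIO()
    parser.write(buf)
    return buf.getvalue()


# Contents of ~/.aws/credentials for the default profile.
credentials = render_ini({"default": {
    "aws_access_key_id": ACCESS_KEY,
    "aws_secret_access_key": SECRET_KEY,
}})

# Contents of ~/.aws/config for the default profile.
config = render_ini({"default": {"region": REGION, "output": "json"}})

if __name__ == "__main__":
    print(credentials)
    print(config)
```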

All done. The management system is now ready to provision the Amazon EKS cluster.

Step 4: Create an Amazon EKS Cluster:

After setting up all the prerequisites, it's time to create the EKS cluster.

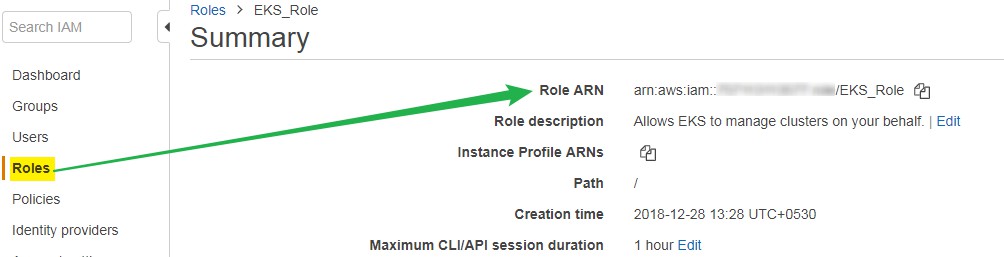

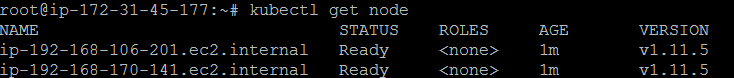

- Get the ARN of the EKS-Service role from the IAM section of the AWS console

- Get the subnet and security group IDs from the CloudFormation stack outputs

- Run the following command to create the EKS cluster:

- Once it is done, your output should look like the one below:
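The create command itself is not reproduced here. As a sketch, the Python below assembles an `aws eks create-cluster` invocation from the role ARN, subnet IDs and security group ID gathered earlier, using the CLI's `--name`, `--role-arn` and `--resources-vpc-config` options. All values are hypothetical placeholders, and the command is only printed, not executed.

```python
import shlex

# Hypothetical values: replace with your cluster name, the EKS-Service role
# ARN, and the subnet / security group IDs from the VPC stack outputs.
CLUSTER_NAME = "my-eks-cluster"
ROLE_ARN = "arn:aws:iam::111122223333:role/EKS-Service"
SUBNET_IDS = ["subnet-aaa", "subnet-bbb", "subnet-ccc"]
SECURITY_GROUP_IDS = ["sg-111"]


def create_cluster_cmd(name, role_arn, subnet_ids, sg_ids) -> list:
    """Build the `aws eks create-cluster` argument list (not executed here)."""
    vpc_config = (
        f"subnetIds={','.join(subnet_ids)},"
        f"securityGroupIds={','.join(sg_ids)}"
    )
    return [
        "aws", "eks", "create-cluster",
        "--name", name,
        "--role-arn", role_arn,
        "--resources-vpc-config", vpc_config,
    ]


if __name__ == "__main__":
    # Print a shell-ready command; pass the list to subprocess.run to execute it.
    cmd = create_cluster_cmd(CLUSTER_NAME, ROLE_ARN, SUBNET_IDS, SECURITY_GROUP_IDS)
    print(shlex.join(cmd))
```

Cluster creation takes several minutes; `aws eks describe-cluster --name <cluster-name> --query cluster.status` reports `ACTIVE` once the control plane is ready.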

Step 5: Configure kubectl for the Amazon EKS Cluster:

Once the EKS cluster has been created, we can connect to it. To do so, we need to update the kubeconfig file on the management system.

- Run the following command to add your EKS cluster details to the kubectl config file

The EKS cluster has been added to the kubectl config file.

- Verify cluster access

We are now successfully connected to the Amazon EKS cluster.
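The exact commands are not shown here. One common way to do this step is `aws eks update-kubeconfig --name <cluster-name>` followed by `kubectl get svc`, which should list the built-in `kubernetes` service in the default namespace. The sketch below wraps that sequence; the cluster name is a hypothetical placeholder, and `verify_access()` needs working AWS credentials, so the demo only prints the command.

```python
import json
import subprocess
from typing import Optional

# Hypothetical cluster name: use the name you chose in Step 4.
CLUSTER_NAME = "my-eks-cluster"


def kubeconfig_cmd(name: str, region: Optional[str] = None) -> list:
    """Build `aws eks update-kubeconfig`, which adds the cluster to ~/.kube/config."""
    cmd = ["aws", "eks", "update-kubeconfig", "--name", name]
    if region:
        cmd += ["--region", region]
    return cmd


def has_kubernetes_service(svc_json: str) -> bool:
    """True if `kubectl get svc -o json` output lists the `kubernetes` service."""
    items = json.loads(svc_json).get("items", [])
    return any(item["metadata"]["name"] == "kubernetes" for item in items)


def verify_access(name: str) -> bool:
    """Update kubeconfig, then list services through the new context."""
    subprocess.run(kubeconfig_cmd(name), check=True)
    out = subprocess.run(
        ["kubectl", "get", "svc", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return has_kubernetes_service(out)


if __name__ == "__main__":
    # Only prints the command; call verify_access(CLUSTER_NAME) to run it for real.
    print(" ".join(kubeconfig_cmd(CLUSTER_NAME)))
```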

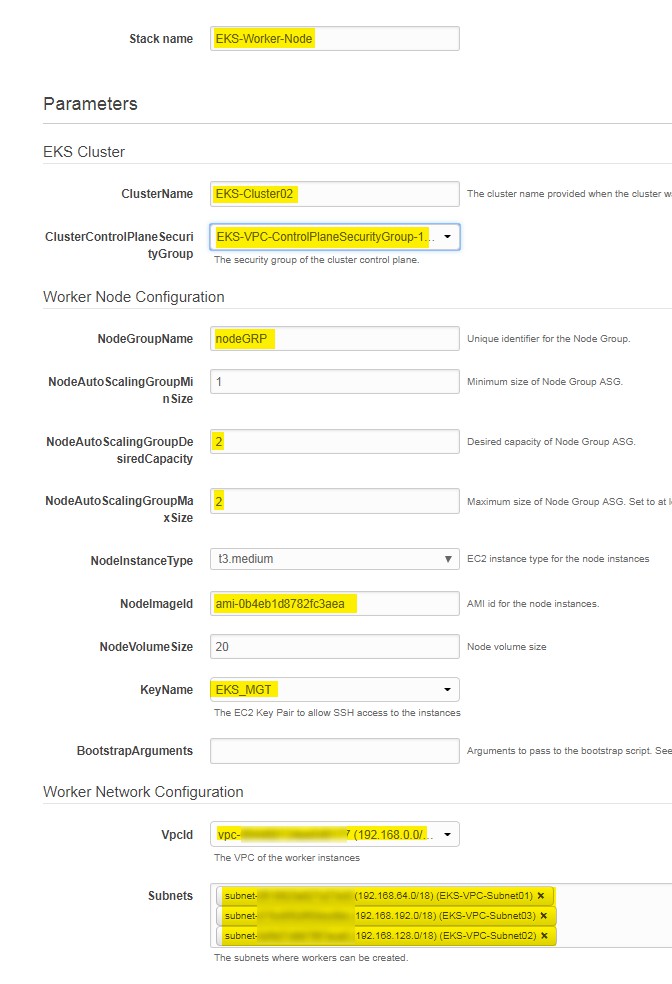

Step 6: Launch and Configure EKS Worker Nodes:

Now that the EKS VPC and control plane are ready, we can launch and configure the worker nodes.

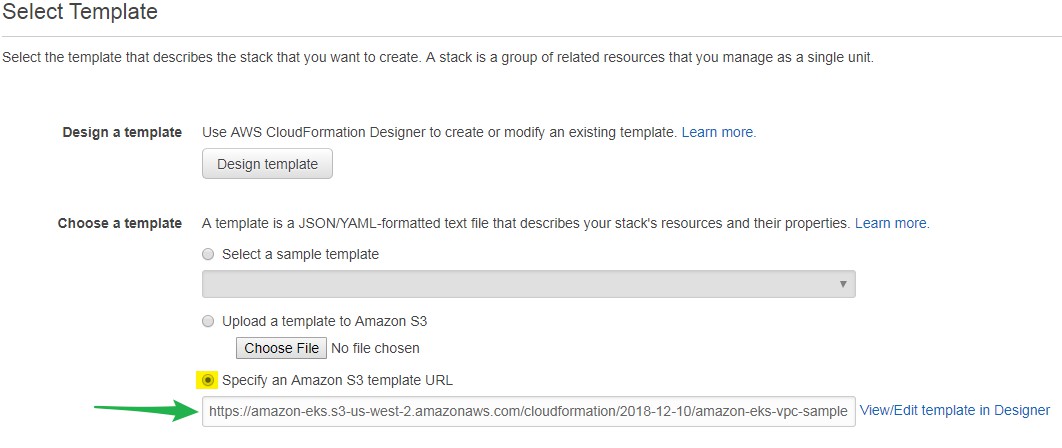

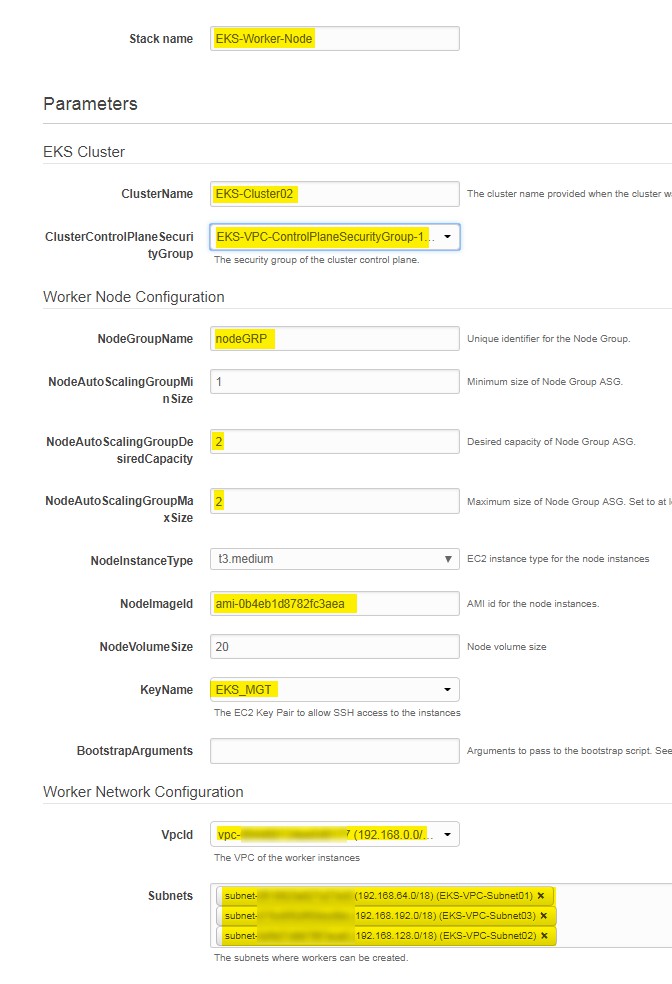

- Log on to the AWS console: https://console.aws.amazon.com/cloudformation

- Go to CloudFormation

- Create stack

- Choose Specify an Amazon S3 template URL: https://amazon-eks.s3-us-west-2.amazonaws.com/cloudformation/2018-12-10/amazon-eks-nodegroup.yaml

- Next

- Enter the stack name: EKS-Worker-Node

- Enter the cluster name, SSH key and image ID: ami-0b4eb1d8782fc3aea, or choose the AMI ID for your region

- Next

- The next page is optional; you can leave it as is or choose your desired settings.

- Next

- Review your configuration and acknowledge that the template creates IAM resources

- Click Create to finish setting up the worker nodes.
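The same worker node stack can also be created from the management system. The sketch below assembles an `aws cloudformation create-stack` call for the node group template, passing parameters as JSON so comma-separated values such as the subnet list need no escaping. The parameter keys are assumed from that template and all values except the stack name, template URL and AMI ID are hypothetical; the command is only printed.

```python
import json
import shlex

TEMPLATE_URL = ("https://amazon-eks.s3-us-west-2.amazonaws.com/cloudformation/"
                "2018-12-10/amazon-eks-nodegroup.yaml")

# Hypothetical values; the parameter key names are assumed from the template.
PARAMS = {
    "ClusterName": "my-eks-cluster",
    "ClusterControlPlaneSecurityGroup": "sg-111",
    "NodeGroupName": "eks-workers",
    "KeyName": "my-ssh-key",
    "NodeImageId": "ami-0b4eb1d8782fc3aea",
    "VpcId": "vpc-222",
    "Subnets": "subnet-aaa,subnet-bbb,subnet-ccc",
}


def create_stack_cmd(stack_name: str, params: dict) -> list:
    """Build `aws cloudformation create-stack` with JSON-encoded parameters."""
    cf_params = [{"ParameterKey": k, "ParameterValue": v} for k, v in params.items()]
    return [
        "aws", "cloudformation", "create-stack",
        "--stack-name", stack_name,
        "--template-url", TEMPLATE_URL,
        "--parameters", json.dumps(cf_params),
        # The template creates an IAM role for the nodes; this flag plays the
        # part of the console's acknowledgement checkbox.
        "--capabilities", "CAPABILITY_IAM",
    ]


if __name__ == "__main__":
    print(shlex.join(create_stack_cmd("EKS-Worker-Node", PARAMS)))
```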

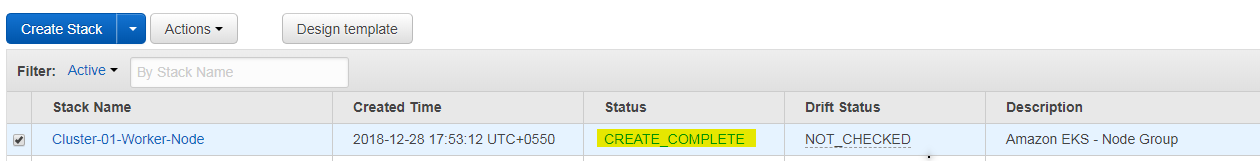

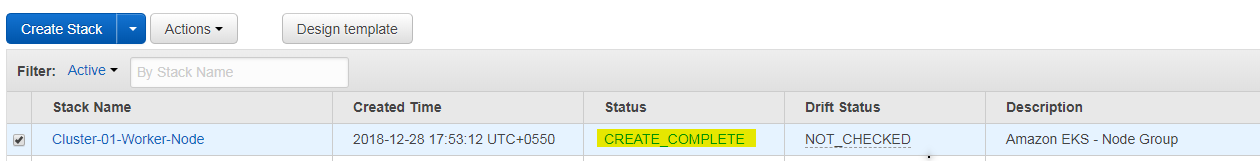

Our worker nodes have been created successfully.

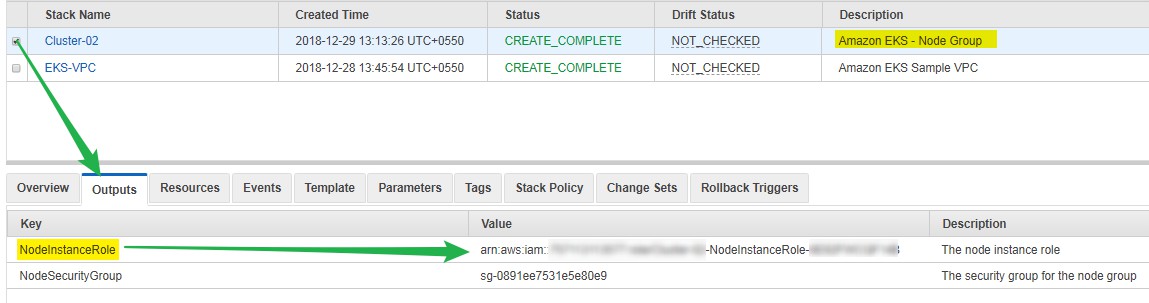

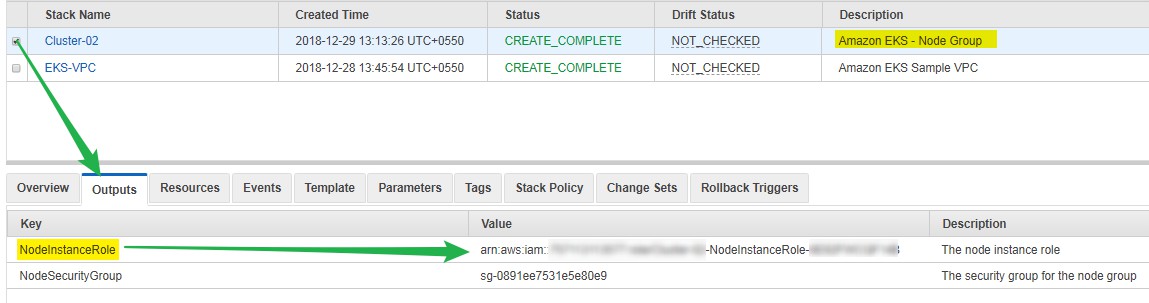

Step 7: Join Worker Nodes to the Cluster:

Once the worker node stack is ready, we can join the nodes to the EKS cluster.

- SSH to the management system

- Get the worker node ARN from the CloudFormation stack in the AWS Console (see the screenshot)

- Download the ConfigMap

- Update the aws-auth-cm.yaml file with your worker node ARN.

- Edit the file in your favourite editor and replace the placeholder ARN (the red highlighted line) with your own.

- Save and exit the file.
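The downloaded `aws-auth-cm.yaml` is a ConfigMap that maps the worker node IAM role to the Kubernetes groups nodes need in order to register. As an alternative to editing by hand, the sketch below renders a ConfigMap of that standard shape with the ARN filled in. It assumes the ARN is the worker stack's node instance role (a `role/` ARN, not an instance profile), and the example ARN uses a made-up account ID.

```python
# Standard shape of the aws-auth ConfigMap for worker nodes; <ROLE_ARN> is a
# placeholder that render_aws_auth() fills in.
AWS_AUTH_TEMPLATE = """\
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: <ROLE_ARN>
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:bootstrappers
        - system:nodes
"""


def render_aws_auth(role_arn: str) -> str:
    """Fill in the worker node role ARN, rejecting anything that is not a role ARN."""
    # Nodes authenticate with their instance *role*; an instance-profile ARN
    # here would leave them unable to join.
    if not role_arn.startswith("arn:aws:iam::") or ":role/" not in role_arn:
        raise ValueError(f"expected an IAM role ARN, got: {role_arn}")
    return AWS_AUTH_TEMPLATE.replace("<ROLE_ARN>", role_arn)


if __name__ == "__main__":
    # Hypothetical ARN; copy the real one from the worker node stack outputs.
    print(render_aws_auth(
        "arn:aws:iam::111122223333:role/EKS-Worker-Node-NodeInstanceRole-EXAMPLE"
    ))
```

The rendered file would then typically be applied with `kubectl apply -f aws-auth-cm.yaml`.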

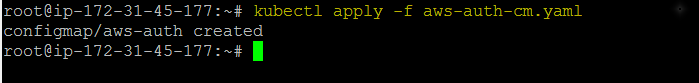

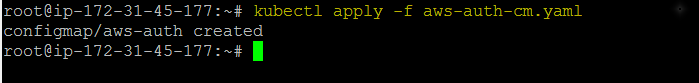

- Apply the configuration

The configuration was updated successfully.

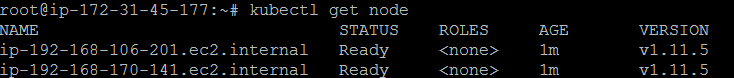

- Verify the added nodes

Both nodes were added successfully.
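The verification command is not shown here. A common check is `kubectl get nodes`; to script it, the JSON form (`kubectl get nodes -o json`) can be parsed as in this sketch, which reads each node's `Ready` condition. The sample data is made up and trimmed to the fields the function uses.

```python
import json

# Made-up, trimmed sample of `kubectl get nodes -o json` output.
SAMPLE_NODES = """
{"items": [
  {"metadata": {"name": "ip-192-168-70-10.us-west-2.compute.internal"},
   "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
  {"metadata": {"name": "ip-192-168-140-20.us-west-2.compute.internal"},
   "status": {"conditions": [{"type": "Ready", "status": "False"}]}}
]}
"""


def node_readiness(nodes_json: str) -> dict:
    """Map each node name to True if its Ready condition has status "True"."""
    readiness = {}
    for node in json.loads(nodes_json).get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        readiness[node["metadata"]["name"]] = any(
            c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
        )
    return readiness


if __name__ == "__main__":
    for name, ready in node_readiness(SAMPLE_NODES).items():
        print(f"{name}: {'Ready' if ready else 'NotReady'}")
```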

Finally, we have successfully provisioned an EKS cluster, worker nodes and a management system to manage the EKS cluster.
