Google Open-Sources Hand Tracking AI "MediaPipe" for Smartphones

Earlier this week, Google open-sourced an AI capable of recognizing hand shapes and motions in real time. The move should help aspiring developers add gesture recognition capabilities to their apps.

The software giant showed off the feature at the Computer Vision and Pattern Recognition (CVPR) 2019 conference, which took place in June. The source code for the AI is now available on GitHub, which you can check out here. You can also download the arm64 APK here, and a version with 3D mode here.

MediaPipe is a cross-platform framework for building pipelines that process perceptual data of different formats (audio and video). The hand tracking itself works by applying machine learning techniques to identify 21 3D keypoints of a hand from a single frame.

“The ability to perceive the shape and motion of hands can be a vital component in improving the user experience across a variety of technological domains and platforms,” reads the Google AI blog post.

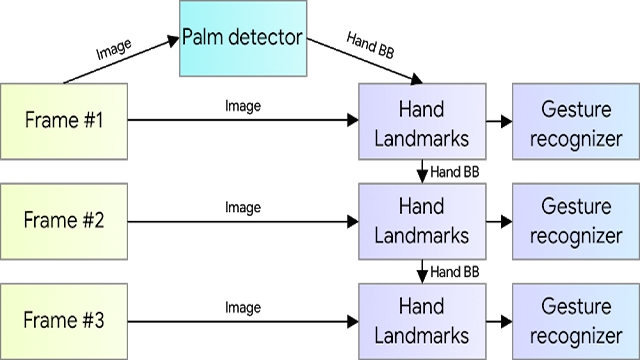

Google employs three AI models for hand tracking in MediaPipe: a palm detector called BlazePalm, a hand landmark model, and a gesture recognizer. BlazePalm analyzes a frame and returns an oriented hand bounding box. The hand landmark model takes the cropped image region and returns 3D hand keypoints. The gesture recognizer then classifies the computed keypoint configuration into one of a set of gestures.
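To make the data flow between the three models easier to picture, here is a minimal Python sketch. It is not MediaPipe's actual API: the names `OrientedBox`, `track_hand`, `detect_palm`, `predict_landmarks`, and `classify_gesture` are hypothetical, and the three models are passed in as plain callables so the sketch only shows how their outputs could be chained.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical types for illustration only, not part of MediaPipe.
Keypoint = Tuple[float, float, float]  # (x, y, z)
NUM_KEYPOINTS = 21                     # the article mentions 21 3D keypoints


@dataclass
class OrientedBox:
    """An oriented hand bounding box (field names are assumptions)."""
    cx: float
    cy: float
    width: float
    height: float
    rotation: float  # radians


def track_hand(
    frame: object,
    detect_palm: Callable[[object], Optional[OrientedBox]],
    predict_landmarks: Callable[[object, OrientedBox], List[Keypoint]],
    classify_gesture: Callable[[List[Keypoint]], str],
) -> Optional[Tuple[List[Keypoint], str]]:
    """Chain the three stages: palm detection, landmarks, gesture."""
    # Stage 1: the palm detector returns an oriented box, or None if no hand.
    box = detect_palm(frame)
    if box is None:
        return None

    # Stage 2: the landmark model works on the region described by the box.
    keypoints = predict_landmarks(frame, box)
    if len(keypoints) != NUM_KEYPOINTS:
        raise ValueError(f"expected {NUM_KEYPOINTS} keypoints, got {len(keypoints)}")

    # Stage 3: the gesture recognizer only sees the keypoints, not the image.
    return keypoints, classify_gesture(keypoints)
```

Passing the models in as functions keeps the sketch independent of any real model; in practice each callable would wrap whatever inference code you use.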

The coolest part of this hand-tracking AI is the ability to identify gestures. Researchers say that the AI is able to recognize common hand signs like “Thumbs up”, closed fist, “OK”, “Rock”, and “Spiderman”. Pretty cool, right? Take a look at the GIF below to watch the AI in action.
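For a rough idea of how a keypoint configuration could be turned into a named gesture, here is a simple Python sketch. It is an illustrative heuristic, not Google's implementation. It assumes the keypoints are ordered wrist first, then four points per finger from thumb to pinky, and it treats a finger as extended when its tip is farther from the wrist than a joint lower on the same finger. The gesture table (for example, "Rock" as index plus pinky) is also an assumption, and "OK" is left out because it would need an extra check that the thumb and index fingertips touch.

```python
import math
from typing import Dict, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, z)

# Assumed index layout: 0 = wrist, then 4 points per finger (thumb..pinky).
# Each entry is (fingertip index, lower joint index used for comparison).
FINGER_JOINTS: Dict[str, Tuple[int, int]] = {
    "thumb": (4, 2),
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}

# Hypothetical mapping from (thumb, index, middle, ring, pinky) states
# to gesture names; True means the finger is extended.
GESTURES: Dict[Tuple[bool, ...], str] = {
    (True, False, False, False, False): "Thumbs up",
    (False, False, False, False, False): "Fist",
    (False, True, False, False, True): "Rock",
    (True, True, False, False, True): "Spiderman",
}


def finger_states(keypoints: Sequence[Keypoint]) -> Tuple[bool, ...]:
    """Return one extended/bent flag per finger, thumb first."""
    if len(keypoints) != 21:
        raise ValueError(f"expected 21 keypoints, got {len(keypoints)}")
    wrist = keypoints[0]
    # A finger counts as extended if its tip is farther from the wrist
    # than the lower joint on the same finger.
    return tuple(
        math.dist(keypoints[tip], wrist) > math.dist(keypoints[joint], wrist)
        for tip, joint in FINGER_JOINTS.values()
    )


def classify_gesture(keypoints: Sequence[Keypoint]) -> str:
    """Look up the finger states in the gesture table."""
    return GESTURES.get(finger_states(keypoints), "Unknown")
```

A distance-based test like this is easy to follow but crude; a more robust recognizer would likely look at joint angles and handle hand rotation.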

“We believe that publishing this technology can give an impulse to new creative ideas and applications by the members of the research and developer community at large,” wrote Valentin Bazarevsky and Fan Zhang, Research Engineers at Google.

The researchers at Google AI plan to enhance the functionality and efficiency of the AI. This might include support for more gestures, faster and more accurate tracking, and support for dynamic gestures.
