The History of Smallpox

Slaying the speckled monster

November 21, 2019

Smallpox was one of the worst diseases in human history. It killed an estimated 300 million people or more in the 20th century alone; only tuberculosis and malaria have been more deadly. Its victims were often children, even infants.

It is also, among diseases, humanity’s greatest triumph: the only human disease we have completely eradicated, with zero naturally occurring cases reported worldwide, even in the most remote locations and the poorest countries, for over forty years.

Along the way, it led to fierce religious and political debates, some of the earliest clinical trials and quantitative epidemiology, and the first vaccines.

This is the story of smallpox, and how we killed it.

The speckled monster

Smallpox, like many diseases, first presented as a fever. This was often accompanied by malaise, headache or backache, and vomiting. After a few days, these symptoms subsided somewhat, but the worst phase was just about to begin. Red marks appeared on the face and inside the mouth, spreading all over the body; the marks grew into pustules, or “pocks”. This characteristic rash, hideous to see and painful to touch, might contain thousands of pocks; in the worst cases, there were so many that they ran together and covered the skin. The pocks lasted a couple of weeks before scabbing and falling off. I won’t include a picture here, but if you have the stomach for it, see the images on Wikipedia.

The disease was highly fatal: mortality estimates at different places and times vary between one in six and one in three, and higher in particularly susceptible populations. In 18th-century England, it caused about half of the deaths of children under age ten. Survivors were usually left with deep scars, including on the face; many young women lost their beauty to the disease, and an unlucky few were blinded by scars over the cornea—it was once the leading cause of blindness in Europe.

It was highly contagious, so it struck in epidemics. Thus the actual suffering of the disease was compounded by the anxiety it caused in the healthy, and the anguish felt by those who passed it on to their loved ones.

There was no cure.

Humanity has suffered from smallpox for a long time. It was unequivocally identified as early as AD 340 in China, and it may have killed Ramses V, pharaoh of Egypt in the 12th century BC, whose mummy has a pustular rash on the face. Many civilizations had gods or goddesses of smallpox, such as Sitala in India or Sopona in Nigeria. Based on Thucydides’s description, the Plague of Athens in 430 BC may have been smallpox. With globalization beginning in the 1400s, it spread to southern Africa and the Americas, usually inadvertently, sometimes as a biological weapon. Many of the native peoples of the Americas lost half their population to the sudden onslaught, and its devastation helped ensure the Spanish conquest of the Aztec and Inca empires.

George Washington caught it in the West Indies at age 19; Abraham Lincoln got it around the time of the Gettysburg Address. Had either of them not survived the disease, American history might have gone quite differently.

Smallpox had one blessing, which people noticed long ago: if you survived it, you would never get it again. This even led to a theory that the cause of the disease included some innate seed, present in everyone; some poison in the blood that could be activated by the wrong trigger, but then expelled from the body for good. (The truth, of course—that the disease was a war fought among particles of organic matter, too small to see; that the body itself was the battleground; and that the soldiers enlisted to defend their homeland could be trained to recognize the enemy and thus to fight off future invasions—was perhaps far stranger.)

The disease was greatly feared. During the American Revolution, John Adams wrote that it was “ten times more terrible than the British, Canadians, and Indians together” and Governor Trumbull of Connecticut wrote, “The smallpox in our Northern army carries with it greater dread than our enemies. Our men dare face them but are not willing to go into a hospital.” Business in a small town could be destroyed by the news that an epidemic had struck.

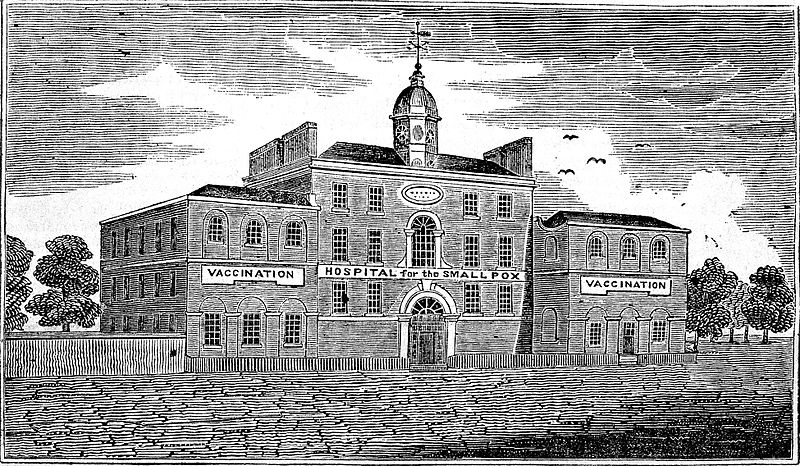

At the same time, in crowded cities, the disease grew so common that it became accepted as a fact of life: everyone got it sooner or later. The 1746 charter of the London Smallpox Hospital likened it to “a thorny hedge through which all must pass, and some die, to reach a field of safety.”

In fact, people considered themselves lucky if they had had a mild case in childhood. That way they got past it, gained lifetime immunity, and hopefully avoided terrible scarring.

Which led to a simple, “so crazy it just might work” idea:

Why not give yourself smallpox on purpose and just get it over with?

Inoculation: the first defense

That was the idea of smallpox inoculation: deliberately communicate a mild form of the disease in order to confer immunity.

Inoculation began as a folk practice. The inoculator, in one version, took contagious matter from the pocks of an infected person, put the liquid on a needle, and pricked the skin of the patient. The patient developed the fever in 7–9 days and passed through all the symptoms in a few weeks.

No one knew why, but the disease contracted in this way seemed to be milder and less deadly. (The best modern theory is that the body has a more effective immune response if the virus enters through the skin rather than the respiratory system.) In any case, inoculation let people choose when they would face smallpox: it was dangerous when you were too young, too old, or especially when pregnant. It also let people face the disease at home, under the care of loved ones, instead of being struck with it while traveling, and it let them isolate themselves to avoid passing it on to others.

The practice was not widely known in Europe before the 1700s, but it was well established in at least China, India, parts of the Middle East including the Ottoman Empire, some parts of Africa, and parts of Wales, where it was known as “buying the pox”. It protected those who received it, but it wasn’t used widely enough to be an effective public health measure, and so epidemics still raged.

Inoculation had spread haphazardly, through travelers who learned of it in distant lands. But it was about to encounter two phenomena it had never met—science and capitalism. And these would catapult it from a folk practice into, ultimately, a worldwide campaign to wipe smallpox off the face of the earth.

The inoculation debate

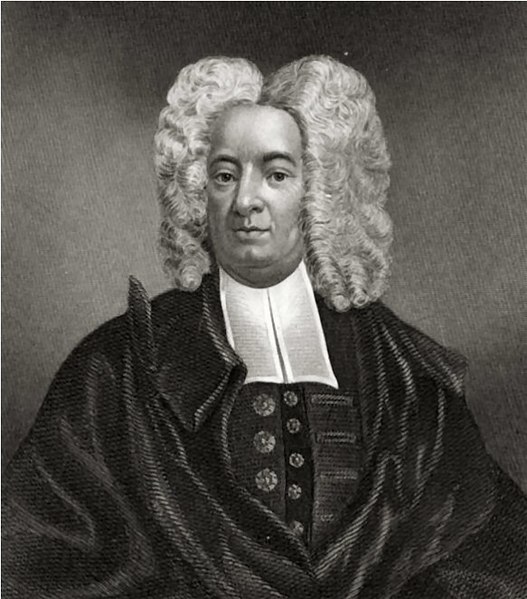

Inoculation was formally introduced to the West in the early 1700s, through letters to the Royal Society, the premier scientific organization of the era. In 1721, it gained strong proponents in both Britain and the American colonies: In London, Lady Mary Montagu, wife of the British ambassador to the Ottoman Empire, advocated for the practice after encountering it in what is now Turkey; she had suffered the disease herself and had lost her brother to it, and she eagerly inoculated her own children. In Boston, the cause was taken up by Cotton Mather, a Puritan minister, who learned of it from a slave of his who had been inoculated in Africa; and by a doctor, Zabdiel Boylston.

Inoculation faced a lot of opposition at first. On its face, the idea seems mad—deliberately inducing one of the worst diseases? And there were true concerns: Inoculated patients suffered real symptoms, and some died. Advocates of inoculation believed that the symptoms were milder, and death rarer, but opponents disagreed. Worse, inoculated patients were still contagious, and anyone who caught the disease from them suffered the full, dangerous version, not a mild form. Thus inoculation, if improperly handled, could start an epidemic.

A great debate ensued. Religious leaders featured prominently—on both sides. The Reverend Edmund Massey gave an entire sermon against it in 1723. According to Defying Providence, a history of inoculation:

He chose to consider two points “For what causes are disease sent among mankind?” and “Who is it that has the power of inflicting them?” In addressing the first of these questions he decided “disease is sent either for the trial of our faith, or for the punishment of our sins.” In either situation, preventing disease interrupts God’s plan. Without the miseries of smallpox, he argued, faith could not be tested. Without the horrible consequences of infection our sins go unpunished; a catastrophe as unpunished sins lead straight to hell. Without threat of punishment, what vile, lascivious practices might we all indulge in? To the second query he replied “God sends disease”. Therefore it is a sin for man to claim God’s prerogative by artificially transmitting smallpox.

Mather fought back, calling inoculation a gift from God, and arguing that it was a moral requirement, according to one’s duty to God to protect one’s health. (Mather was also a prominent prosecutor in the infamous Salem witch trials, though, so he didn’t get everything right.)

Arguments from Providence could seemingly be bent to prove whatever one wanted. If you were against inoculation, it was God’s will that we get smallpox; if you were for it, then it was God’s will that we avoid the disease. Whether inoculation was a gift from God in accordance with His will, or a human intervention that went against it, was apparently a matter of perspective.

Proving the superiority of inoculation

With the religious debates unresolvable, there was only one way to settle the matter: with data.

Physician Thomas Nettleton began collecting data on smallpox cases: the number of natural smallpox cases, and the number of inoculations, and how many died of each. In 1722 he sent these figures to James Jurin, secretary of the Royal Society. Jurin expanded the effort, putting out a general call for as many such studies as he could collect.

The idea of doing a quantitative study like this seems obvious today, but at a time when medicine was still dominated by tradition and authority, it was cutting-edge. Newton had recently demonstrated the power of mathematics in physics; Jurin was thus inspired to apply math to medicine.

By 1725, Jurin had collected 14,559 cases of natural smallpox and 474 inoculations, from both England and America. The answer was clear: natural smallpox killed about one in six who contracted it; inoculation killed less than one in fifty. Inoculation was dangerous by the standards of today’s medical procedures, at a ~2% death rate, but it was about ten times safer than smallpox.
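Jurin’s comparison comes down to simple arithmetic on fatality rates. The sketch below uses only the rounded ratios quoted above (one in six, one in fifty) rather than Jurin’s raw death counts, which aren’t given here, and the function names are illustrative. Because the inoculation rate was below one in fifty, the ratio it computes is a lower bound on how much safer inoculation was.

```python
from fractions import Fraction

# Rounded mortality ratios as stated in the text, not Jurin's raw counts.
NATURAL_CFR = Fraction(1, 6)       # natural smallpox: about one death in six cases
INOCULATION_CFR = Fraction(1, 50)  # inoculation: reported as *less than* one in fifty


def relative_risk(rate_a: Fraction, rate_b: Fraction) -> Fraction:
    """How many times deadlier outcome A is than outcome B."""
    return rate_a / rate_b


def expected_deaths(cases: int, rate: Fraction) -> float:
    """Deaths expected among `cases` people at a given fatality rate."""
    return float(cases * rate)


if __name__ == "__main__":
    rr = relative_risk(NATURAL_CFR, INOCULATION_CFR)
    print(f"inoculation death rate: {float(INOCULATION_CFR):.0%}")
    # Since the true inoculation rate was below 1/50, this is a lower bound.
    print(f"natural smallpox is at least {float(rr):.1f}x deadlier")
    # Apply each rate to 474 people (an illustration, not Jurin's recorded deaths).
    print(f"474 cases at the natural rate: ~{expected_deaths(474, NATURAL_CFR):.0f} deaths")
    print(f"474 cases at the inoculation rate: ~{expected_deaths(474, INOCULATION_CFR):.0f} deaths")
```

With these rounded figures the ratio comes out a little over eight, in line with the rough “about ten times” above.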

And improvements were coming that would make it safer still.

Making inoculation safer & easier

With the virtues of inoculation demonstrated, it spread throughout England, and eventually to much of Europe. But it didn’t become universal, because it was still time-consuming, expensive, painful, and risky. And these problems were self-inflicted, the result of groundless practices introduced by Western physicians.

In the original Middle Eastern practice, a small scratch was made in the skin with a needle. For unclear reasons, English physicians transformed this into an incision with a lancet, which was unnecessarily painful and often became infected. Further, influenced by then-current unscientific theories of health (including the humoral theory that dated back to Hippocrates), physicians made their patients undergo a lengthy “preparation” of bland diets, enemas, and vomiting, and treated them with “medicines” including mercury (which is toxic). Altogether, this method took many weeks, taking patients away from their jobs or farms, and carried an unnecessary risk of complications.

Inoculation was greatly improved by country surgeon Robert Sutton and his son Daniel, after Daniel’s older brother almost died from an inoculation gone wrong. Through experimentation, Robert Sr. discovered that the incision could be replaced with a small jab from the lancet. Daniel, going further, found he could reduce the “preparation” from a month to 8–10 days; he also had patients walk around outdoors or even work when they were not contagious, instead of staying bedridden. In the 1760s, the Suttons ran a booming business in inoculation houses. They advertised how convenient and mild their treatment was, boasting that most patients had no more than twenty pustules, and could return to their lives within three weeks. They opened multiple locations and eventually an international franchise. Catherine the Great invited Daniel Sutton to Russia to inoculate her in 1768 (he declined; another inoculator, Thomas Dimsdale, accepted and she made him a Baron).

In 1767, William Watson, a physician at the Foundling Hospital in London, tested different “preparation” regimens in one of the earliest clinical trials (n=31). In three groups, he tested a combination of laxative and mercury, laxative only, and no preparation as a control. To make the study more precise, he counted the pocks on each patient as a quantitative measure of disease severity. He concluded that mercury was not helping the patients (and with modern statistical methods, he could have seen that the laxative wasn’t, either).
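As one example of the kind of “modern statistical method” meant here, a permutation test on pock counts asks how often randomly reshuffling patients between two groups produces a difference in average pock count at least as large as the one actually observed. This is a sketch, not a reanalysis: Watson compared three groups while this function compares two at a time, and the numbers in the usage example are invented toy data, not his recorded counts.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test for a difference in mean pock counts.

    Returns an estimated p-value: the share of random relabelings of the
    pooled patients whose absolute difference in group means is at least
    as large as the observed one (with a +1 correction so it is never 0).
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly reassign patients to the two groups
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    return (extreme + 1) / (n_resamples + 1)


if __name__ == "__main__":
    # Toy pock counts for illustration only; NOT Watson's data.
    laxative_only = [40, 55, 38, 60, 47]
    no_preparation = [52, 44, 61, 39, 50]
    print(f"p ≈ {permutation_test(laxative_only, no_preparation):.2f}")
```

A large p-value means the observed difference is easily produced by chance alone, which is the sense in which a treatment can be shown “not to be helping”.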

In the 1780s, physician John Haygarth recorded every case of smallpox in his town of Chester and every contact they had, to discover exactly how the disease spread—what we would now call “contact tracing”. Through this he disproved the myth that smallpox could spread over long distances, or that it was dangerous to even walk by the house of a patient. Instead he showed that it could only be transmitted through the air over about 18 inches, or through contact with infected material such as clothing. Based on these discoveries, Haygarth proposed a list of “Rules of Prevention” that amounted to isolation of patients and washing of possibly infected material. But they could never be enforced consistently enough to prevent outbreaks. That would require a new technology.

Fortunately, that technology was just about to arrive.

The origin of vaccines

Through these improvements, the mortality rate of inoculation was eventually reduced to less than one in five hundred. As it became safer, more convenient and more affordable, it spread throughout England. Entire country towns received it. And when this happened, the inoculators noticed something:

Some people were already immune.

Of course, if you had had smallpox before, you’d have immunity. But some cases couldn’t be explained by previous smallpox infection. No one knew what to make of it, until in 1768 a farmer came for inoculation by country surgeon John Fewster. The farmer, who had no response to multiple inoculation attempts, said that he had never had smallpox, but that he had recently suffered from cowpox.

With this clue, Fewster started questioning his patients about cowpox, and found that cowpox explained the cases of pre-existing immunity. He reported the finding to a local medical society, and eventually it became known to a nearby surgeon’s apprentice named Edward Jenner. (The origin story that is usually told, where Jenner learns of cowpox’s protective properties from local dairy worker lore or his own observations of the beauty of the milkmaids, turns out to be false—a fabrication by Jenner’s first biographer, possibly an attempt to bolster his reputation by erasing any prior art.)

Jenner saw an opportunity. Even in the best case of inoculation, the patient experienced mild symptoms; the worst case was serious disease or death. And during the treatment, the patient was still contagious, which meant quarantine—and if the quarantine failed, the risk of an epidemic. Cowpox wasn’t a disease anyone wanted to have—but it was not deadly, and it could never spread smallpox.

But could cowpox be substituted for smallpox? Fewster was skeptical, because some patients who had reported cowpox were not immune to smallpox. Through careful research, Jenner untangled the confusion: there were multiple diseases that looked like cowpox. Jenner learned to differentiate between true cowpox, staphylococcal infections, and another disease called “milker’s nodes”. When properly identified, cowpox clearly did confer immunity to smallpox.

Jenner tested his technique in 1796 and published it soon after. Since the Latin name for smallpox was “variola”, Jenner called cowpox “variolae vaccinae”, or “smallpox of the cow”. Later, to distinguish the two methods, inoculation with smallpox was called “variolation”, and with cowpox, vaccination. (Decades later, when Louis Pasteur extended the technique to other diseases, he deliberately extended the meaning of “vaccination” in honor of Jenner, giving the term its modern definition.)

Vaccination turned out to have one drawback: unlike inoculation, it wore off after a matter of years. This was solved with periodic revaccinations.

Like inoculation before it, vaccination was controversial and Jenner had to fight for its acceptance. Then as now, the technique stoked unfounded fears—one cartoon showed vaccine recipients growing cow parts out of their bodies. But the method worked, it was safer than variolation, and eventually it gained acceptance.

Improving the vaccine: safety and preservation

The next 150 years saw a series of incremental improvements that helped vaccination spread to most of the population in much of the world.

Think about the challenges of large-scale vaccination: When a patient shows up for the procedure, where do you get the vaccine fluid? You can’t just manufacture it in a factory, like textiles or pins, from raw materials. You can’t harvest it from plants like produce. And in Jenner’s era, you couldn’t get it from the refrigerator, which wouldn’t be widely available for more than a century.

Instead, the initial method of vaccination, like variolation, was “arm-to-arm”. That is, the virus was taken from the pustule of a previous patient and transferred to a new one: like a blood transfusion, but for a virus. In an era before disease screening—before the germ theory, even—well, you can imagine the risks. On multiple occasions, patients to be vaccinated accidentally contracted syphilis, which had been undiagnosed or misdiagnosed in the source patient. (Protection from the smallpox was not worth receiving the Great Pox.)

For this reason, by the late 1800s, the arm-to-arm method was abandoned in favor of growing the virus on calves and harvesting it from them directly. Transferring from an animal directly to a human had a reduced risk of transmitting disease, since not all human diseases can exist in cows. But some can, especially general bacterial infections. To combat this, anti-bacterials were added, starting with glycerin, and later, when they were available, antibiotics.

The other challenge was: where and when do you harvest the vaccine, and if the answers are not “here” and “now”, how do you transport and store it? Before we solved these problems, the infected cow was sometimes literally walked around town for vaccinations, or brought to the town hall where patients could line up.

Small amounts of vaccine could be stored for a short time on ivory points, between glass plates, on dried threads, or in small vials. But the virus would lose its effectiveness quickly, especially when subject to heat. When King Charles IV of Spain sent a vaccination expedition to the Americas as a philanthropic effort in 1803, the crew took 22 orphan boys: one was vaccinated before they left, and when his pustule formed, a second boy was vaccinated from the first, arm-to-arm; and so on in a human-virus chain that sustained the vaccine during their months-long voyage across the Atlantic.

Degradation, especially from heat, is a general problem affecting organic material. There are two basic solutions: refrigerating (or freezing), and drying. Before refrigeration, or when it was expensive or otherwise impractical, such as in tropical regions during the World Wars, drying was necessary. The challenge is that the simplest way to dry a material is to heat it, and heat is what we’re trying to protect the material from. Further, drying would often cause proteins to coagulate, making it difficult to reconstitute the material.

The solution, developed in the early 1900s, was “freeze drying”. This technique involves rapidly freezing the material, then putting it under a vacuum so the ice “sublimates”: that is, water vapor evaporates directly off the ice without ever melting into water. A secondary drying process (involving mild heat and/or a chemical desiccant) removes the remaining moisture, and the result is dry material that has not been damaged in structure. If properly sealed off from moisture in the air, the material will last for a long time, even when subject to heat, and it can easily be reconstituted by adding water. Freeze-drying was first applied to blood transfusions in the 1930s; Leslie Collier, in 1955, found that it allowed the smallpox vaccine to last several months even at 37° C (98.6° F), which was suitable for tropical climates.

Humanity turns the tide against smallpox

With a safe, effective, portable vaccine, the world had the tools to dramatically reduce smallpox—and ultimately, to completely eliminate it.

The controversy over vaccination never completely disappeared. It would become popular when an epidemic struck, then lose favor when the disease abated. Multiple countries, at different times, tried to make it compulsory; those attempts often met with strong opposition. The actual risks of infection mentioned above gave people real cause for concern, at least until those problems were solved in the mid-1900s.

But the practice spread regardless, and smallpox was pushed into retreat. It ceased to be endemic in much of Europe by the 1930s, the US and Canada by the 1940s, and the rest of the developed world by the 1950s. Australia, which did not see the disease until European explorers arrived in the late 1700s, managed to keep it from ever becoming endemic by quarantining arriving ships, and saw its last case in 1917. By 1966, it was endemic only in Africa, the Middle East, South and Southeast Asia, and Brazil.

Of course, even the countries that had eliminated endemic smallpox would occasionally have imported cases. And at the dawn of the jet age, the risk of global contagion was about to escalate. When overseas travel was slower than the progression of the disease itself, you could control it via quarantine. But with an incubation period of over a week, an infected patient, oblivious, could step on a plane and be halfway around the world long before showing any symptoms.

The stage was thus set for a final charge that would force smallpox into an unconditional surrender.

Global eradication

Smallpox was a good candidate for eradication, for several reasons: It could only infect humans, so there could be no animal reservoir of the disease. It was easy to diagnose and to distinguish from other diseases. There was a cheap and effective vaccine. And unlike diseases such as measles, it was only contagious when the obvious symptom, the rash of pustules, was apparent, not during the fever that preceded it.

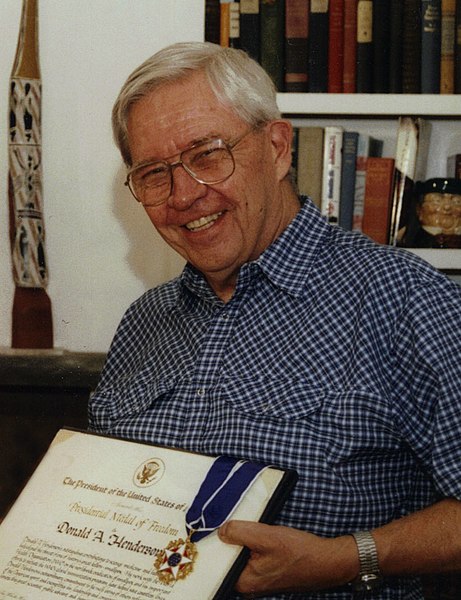

Still, many saw eradication as impossible or not worth the cost and effort, and it was hard to get agreement and resources for the project. The World Health Organization (WHO) resolved to eradicate smallpox in 1959, but devoted few resources to the project until a second resolution in 1966 (the so-called “Intensified” program, which was a euphemism for “we’re serious now”). The man chosen to lead the new effort was D. A. Henderson, chief of the surveillance section at the United States CDC (then the Communicable Disease Center, now the Centers for Disease Control and Prevention).

I have a personal bias against large, non-profit, inter-governmental organizations, but given the awesomeness of this achievement, I expected that view to be seriously challenged, and thought I might come away from the story with a newfound respect for the WHO. Reading Henderson’s memoirs, though, reinforced for me how bureaucratic and political such organizations can be. There was a tremendous lack of internal alignment on goals and strategy, and regional directors often refused to cooperate with the program. Indeed, at the launch of the “intensified” effort, the WHO director-general, Marcolino Candau, was against the program, expecting it to fail, and wanted to give it as little publicity as possible. In Henderson’s telling of the story, at least, some of the individuals assigned to lead national efforts were simply incompetent, and there wasn’t much he could do except wait for them to be replaced. Henderson and his team worked apart from, and often around, the bureaucracy, and the effort succeeded not because of any grand unity of purpose at the top, but because of the industriousness of many managers in the middle, and the devoted effort of over a hundred thousand workers and volunteers on the ground.

Eradication: strategy and tactics

The defeat of smallpox was accomplished, as often in war, through superior artillery, surveillance, and surrounding the enemy.

The primary weapon needed was a tool to perform vaccinations quickly, cheaply and effectively, ideally with minimal training—and without reliable electricity. The basic lancet was less than ideal, requiring a delicate technique. At first, they used an army-designed jet injector that was easier to use and had a higher success rate, and could be powered with a hydraulic foot pedal. Then an even better tool was invented: the bifurcated needle, a short metal pin with a double point. It was simple, small, cheap, and it could be reused, after sterilization by boiling. Best of all, it used a tiny but still effective amount of vaccine, stretching a vial to four times the doses other applicators could achieve. It was so central to the success of the campaign that when it was over, Henderson awarded his team a lapel badge signifying the “Order of the Bifurcated Needle”.

But the most fascinating innovation to me was in strategy. At the beginning, it was assumed that the path to eradication was mass vaccination—indeed, this was what made some people skeptical of the entire goal. It wasn’t the sheer numbers that made mass vaccination a barrier, but the managerial difficulty of getting the last 10% of the population—some of whom were literally still living in tribes in the wilderness.

The assumption turned out to be false. The winning strategy, called “ring vaccination” or “surveillance and containment”, was to establish a network of health professionals who could quickly alert the nearest office to new smallpox cases; when alerted, they would immediately head to the scene and vaccinate everyone who had come in contact with the known cases, in a “ring” around them, to stop the disease’s spread. It was like fighting a forest fire with a controlled burn around the perimeter, instead of trying to douse the entire forest with water before a fire can start.
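The containment step is, in effect, a breadth-first search outward from each confirmed case over a list of contacts. The sketch below assumes contacts are recorded as a simple mapping from each person to the people they met, and adds a hypothetical depth parameter for whether to stop at direct contacts or also reach contacts of contacts. It illustrates the idea only; it is not a description of the field procedures the eradication teams actually used.

```python
from collections import deque


def vaccination_ring(contacts, known_cases, depth=1):
    """Return everyone within `depth` contact steps of a confirmed case.

    contacts: dict mapping each person to an iterable of people they met
        (hypothetical format; assumed to list contacts in both directions).
    known_cases: people with confirmed smallpox (already infected, so they
        are isolated rather than included in the ring).
    depth: 1 = direct contacts only; 2 = contacts of contacts as well.
    """
    ring = set()
    seen = set(known_cases)
    frontier = deque((case, 0) for case in known_cases)
    while frontier:
        person, steps = frontier.popleft()
        if steps == depth:
            continue  # don't expand beyond the chosen ring width
        for other in contacts.get(person, ()):
            if other not in seen:
                seen.add(other)
                ring.add(other)
                frontier.append((other, steps + 1))
    return ring


if __name__ == "__main__":
    # Hypothetical village contact list.
    contacts = {
        "case": ["spouse", "neighbor"],
        "spouse": ["case", "market_seller"],
        "neighbor": ["case"],
        "market_seller": ["spouse", "customer"],
    }
    print(sorted(vaccination_ring(contacts, ["case"], depth=1)))
    # ['neighbor', 'spouse']
    print(sorted(vaccination_ring(contacts, ["case"], depth=2)))
    # ['market_seller', 'neighbor', 'spouse']
```

The search touches only the people near a case, never the whole population, which is what made the strategy so much cheaper than mass vaccination.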

With new weapons and strategy, and through unflagging effort over more than a decade, the international project beat smallpox back into its final stronghold in the Horn of Africa. It made its last stand in Somalia in 1977, infecting a 23-year-old cook named Ali Maow Maalin—the last endemic case of smallpox in human history.

He lived.

How did it happen?

This blog seeks the roots of human progress. In 1720, inoculation had been a folk practice in many parts of the world for hundreds of years, but smallpox was still endemic almost everywhere. The disease had existed for at least 1,400 and probably over 3,000 years. Just over 250 years later—it was gone.

Why did it take so long, and how did it then happen so fast? Why wasn’t inoculation practiced more widely in China, India, or the Middle East, when it had been known there for centuries? Why, when it reached the West, did it spread faster and wider than ever before—enough to significantly reduce and ultimately eliminate the disease?

The same questions apply to many other technologies. China famously had the compass, gunpowder, and cast iron all before the West, but it was Europe that charted the oceans, blasted tunnels through mountains, and created the Industrial Revolution. In smallpox we see the same pattern.

I won’t try here to explain why the Scientific and Industrial Revolutions took place when and where they did, but just looking at the story of smallpox, we can see themes play out that should by now be familiar to readers of this blog:

The idea of progress. In Europe by 1700 there was a widespread belief, the legacy of Bacon, that useful knowledge could be discovered that would lead to improvements in life. People were on the lookout for such knowledge and improvements and were eager to discover and communicate them. Those who advocated for inoculation in 1720s England did so in part on the grounds of a general idea of progress in medicine, and they pointed to recent advances, such as using Cinchona bark (quinine) to treat malaria, as evidence that such progress was possible. The idea of medical progress drove the Suttons to make incremental improvements to inoculation, Watson to run his clinical trial, and Jenner to perfect his vaccine.

Secularism/humanism. To believe in progress requires believing in human agency and caring about human life (in this world, not the next). Although England learned about inoculation from the Ottoman Empire, it was reported that Muslims there avoided the practice because it interfered with divine providence—the same argument Reverend Massey used. In that sermon, Massey said in his conclusion, “Let them Inoculate, and be Inoculated, whose Hope is only in, and for this Life!” A primary concern with salvation of the immortal soul precludes concerns of the flesh. Fortunately, Christianity had by then absorbed enough of the Enlightenment that other moral leaders, such as Cotton Mather, could give a humanistic opinion on inoculation.

Communication. In China, variolation may have been introduced as early as the 10th century AD, but it was a secret rite until the 16th century, when it became more publicly documented. In contrast, in 18th-century Europe, part of the Baconian program was the dissemination of useful knowledge, and there were networks and institutions expressly for that purpose. The Royal Society acted as an information hub, taking in interesting reports and broadcasting the most important ones. Prestige and acclaim came to those who announced useful discoveries, so the mechanism of social credit broke secrets open, rather than burying them. Similar communication networks spread the knowledge of cowpox from Fewster to Jenner, and gave Jenner a channel to broadcast his vaccination experiments.

Science. I’m not sure how inoculation was viewed globally, but it was controversial in the West, so it was probably controversial elsewhere as well. The West, however, had the scientific method. We didn’t just argue, we got the data, and the case was ultimately proved by the numbers. If people didn’t believe it at first, they had to a century later, when the effects of vaccination showed up in national mortality statistics. The method of meticulous, systematic observation and record-keeping also helped the Suttons improve inoculation methods, Haygarth discover his Rules of Prevention, and Fewster and Jenner learn the effects of cowpox. The germ theory, developed several decades after Jenner, could only have helped, putting to rest “miasma” theories and dispelling any idea that one could prevent contagious diseases through diet and fresh air.

Capitalism. Inoculation was a business, which motivated inoculators to make their services widely available. The practice required little skill, and it was not licensed, so there was plenty of competition, which drove down prices and sent inoculators searching for new markets. The Suttons applied good business sense to inoculation, opening multiple houses and then an international franchise. They provided their services to both rich and poor by charging higher prices for better room and board during the multiple weeks of quarantine: everyone got the same medical procedure, but the rich paid more for comfort and convenience, an excellent example of price differentiation without compromising the quality of health care. Business means advertising, and advertising at its best is a form of education, helping people throughout the countryside learn about the benefits of inoculation and how easy and painless it could be.

The momentum of progress. The Industrial Revolution was a massive feedback loop: progress begets progress; science, technology, infrastructure, and surplus all reinforce each other. By the 20th century, progress against smallpox clearly depended on previous progress, both on specific technologies and on the general environment. Think of Leslie Collier, in a lab at the Lister Institute, performing a series of experiments to determine the best means of preserving vaccines. The solution he found, freeze-drying, was an advanced technology developed only decades before, which itself depended on the science of chemistry and on technologies such as refrigeration. Or consider the WHO eradication effort: electronic communication networks alerted doctors to new cases almost immediately; airplanes and motor vehicles got them and their supplies to the site of an epidemic, often within hours; mass manufacturing allowed needles and vaccines to be produced cheaply at scale; refrigeration and freeze-drying allowed vaccines to be preserved for storage and transport; and all of it was guided by the science of infectious diseases, which by that time was itself supported by advanced techniques from X-ray crystallography to electron microscopy.

What about institutions? This is less clear to me. Mass inoculation or vaccination happened in cities and towns when epidemics struck, and these would probably have been organized in part by local government. But how did vaccination spread more broadly? None of the sources I read said much about this. Compulsion by law seems to have helped, although some areas achieved results almost as good without compulsory vaccination, while in others attempts at compulsion weren’t enforced—perhaps culture is more important than law here. Other factors included schools requiring vaccination of students, the police and military requiring it of their recruits, and insurance companies requiring it for life insurance (or demanding an extra premium for the unvaccinated). This is an interesting area for further research.

Finally, what about the eradication effort? The WHO seems to have been important in this, although it’s unclear to me exactly how. I would say it contributed leadership, except that, as mentioned, the director-general was against the program, so it may have been sheer luck that the machine of politics approved the program and gave it to a competent manager. What about funding? The program was surprisingly cheap: it cost $23 million/year between 1967 and 1969; adjusted for inflation, that is well under $200 million/year in 2019. Total US private giving just to overseas programs is over $40 billion; less than 1% of that would pay for eradication were it needed today. The Gates Foundation alone gave away $5 billion in 2018; if smallpox were still around, Bill could easily fund eradication himself, and he probably would. If anything, I suspect what the WHO contributed was a mission and a forum in which someone could actually think that eradication was their job, and just enough authority and clout to make international cooperation possible (though not easy, according to Henderson).

Could smallpox return?

Smallpox is gone. Or is it?

The disease had disappeared from humanity, and the virus could not last long outside a human host. There was no animal reservoir. There were stocks of the virus for research, but they were destroyed in the 1980s, except at two highly secured laboratories that were allowed to keep them. Owing to Cold War politics (perhaps a concept of “mutually assured destruction” for biological weapons), one of those laboratories was at the CDC in Atlanta, Georgia; the other was in Russia at the State Center for Research on Virology and Biotechnology, known as “VECTOR”.

According to a 1972 international convention, any biological weapons research programs were supposed to have been destroyed. But in secret, the Soviets had been developing biological weapons, including smallpox. After the fall of the Soviet Union, many scientists in the program defected to other countries. We cannot know which of them may have taken vials of the virus with them, or where covert biological weapons programs may be going on now.

A new smallpox epidemic is a nightmare to contemplate. Routine vaccination has not been performed in forty years, and most of the public is susceptible. There are vaccine stocks, but not enough to vaccinate the world. Most doctors have never seen a smallpox case, and most health workers have not been trained to handle one. Modern transportation systems would scatter the disease around the globe faster than we could track it, and modern social networks would spread fear, disgust, and misinformation about the disease itself and about the vaccines that could actually protect people from it, faster than we could counter them. With or without evidence, there would be accusations that it had been released deliberately as a biological attack by a nation-state or terrorist organization.

But there would be room for hope. The smallpox vaccine is still manufactured for limited use and kept in the US Strategic National Stockpile. (It’s not made with live cows anymore, but in cell cultures.) And in a global epidemic, humanity would surely swing into action, uniting (however briefly) against a common, microbiological foe. Vaccine production would be ramped up, and perhaps improved vaccines with few to no side effects could be created (some were in the research phase around the time of eradication). Antiviral drugs, which were not available in the smallpox era, could be developed (one, tecovirimat, has already been FDA-approved for smallpox). The disease, remember, becomes contagious mainly once the telltale rash appears, making it easier to establish isolation and quarantine. And most importantly, the knowledge of how to fight smallpox has not been lost: it is, in fact, preserved in a 1,400-page book published by the WHO.

The future of disease eradication

Smallpox was the first disease to be eradicated, but it doesn’t have to be the last.

Polio may be next. Two of the three strains of wild poliovirus have already been eradicated, and worldwide there are fewer than 1,000 wild poliovirus cases per year (in some years, fewer than 100). Polio is considered endemic in only three remaining countries: Afghanistan, Pakistan, and Nigeria. But the effort has taken longer than smallpox eradication did. In 1988, the WHO set a goal of polio eradication by the year 2000, and the goal has not been met. Complicating the effort, both polio vaccines require multiple doses and must be kept refrigerated. Detection is also more difficult, since many infected people show no obvious symptoms such as paralysis.

Other diseases will be even more difficult. Yellow fever can infect animals, meaning that even if it were eliminated from all humans, it could return from the animal reservoir. Cholera can last for a long time in water. Tuberculosis can be latent for a long time in humans. HIV does not yet have an effective vaccine, nor does malaria (although tropical diseases may be eliminated in the future with genetic engineering, such as modifying mosquitoes with a “gene drive”). Measles is a better candidate, but it is more contagious than smallpox, and it is contagious before its rash is apparent, making isolation harder.

But, to quote David Deutsch, anything not forbidden by the laws of nature is achievable, given the right knowledge. No law of physics says diseases must exist. And so, if human knowledge, technology, wealth, and infrastructure continue to progress, I believe humanity will see the end of disease.

Thomas Jefferson, a strong advocate of Jenner’s new vaccine, wrote to him: “Future nations will know by history only that the loathsome small-pox has existed and by you has been extirpated.” Someday, perhaps, that will be said of disease itself.

Sources and further reading

Defying Providence: Smallpox and the Forgotten 18th-Century Medical Revolution, by Arthur Boylston (a few related articles are also available at the James Lind Library)

Smallpox: The Death of a Disease, by D. A. Henderson

Smallpox and Its Eradication, published by the WHO

Articles at Our World in Data, the Centers for Disease Control and Prevention, the World Health Organization, and the National Institutes of Health

Leslie Collier’s original papers: “The Preservation of Vaccinia Virus” and “The Development of a Stable Smallpox Vaccine”

On freeze-drying: “A World Where Blood Was Made Possible”, or go for Greaves’s original paper “The Preservation of Proteins by Drying”

The Reverend Edmund Massey’s “Sermon Against the Dangerous and Sinful Practice of Inoculation”

Also: The History of Vaccines, Wikipedia
